Preface

Bitmovin has been “Shaping the Future of Video” for over 10 years now and, in addition to our own innovations, we’ve been actively taking part in standardization activities to improve the quality of video technologies for the wider industry. I have been a member and attendee of the Moving Picture Experts Group for 15+ years and have been documenting the progress since early 2010. Recently, we’ve been working on several new initiatives, including the use of learning-based codecs and enhancing support for more energy-efficient media consumption.

The 144th MPEG meeting highlights

The 144th MPEG meeting was held in Hannover, Germany! For those interested, the press release with all the details is available. It’s always great to see and hear about progress being made in person.

The main outcome of this meeting is as follows:

- MPEG issues Call for Learning-Based Video Codecs for Study of Quality Assessment

- MPEG evaluates Call for Proposals on Feature Compression for Video Coding for Machines

- MPEG progresses ISOBMFF-related Standards for the Carriage of Network Abstraction Layer Video Data

- MPEG enhances the Support of Energy-Efficient Media Consumption

- MPEG ratifies the Support of Temporal Scalability for Geometry-based Point Cloud Compression

- MPEG reaches the First Milestone for the Interchange of 3D Graphics Formats

- MPEG announces Completion of Coding of Genomic Annotations

This post will focus on MPEG Systems-related standards and visual quality assessment. As usual, the column will end with an update on MPEG-DASH.

Visual Quality Assessment

MPEG does not create standards in the visual quality assessment domain. However, it conducts visual quality assessments for its standards during various stages of the standardization process. For instance, it evaluates responses to calls for proposals, conducts verification tests of its final standards, and so on.

MPEG Visual Quality Assessment (AG 5) issued an open call to study quality assessment for learning-based video codecs. AG 5 has been conducting subjective quality evaluations for coded video content and studying their correlation with objective quality metrics. Most of these studies have focused on the High Efficiency Video Coding (HEVC) and Versatile Video Coding (VVC) standards. To facilitate the study of visual quality, MPEG maintains the Compressed Video for the study of Quality Metrics (CVQM) dataset.
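To make the idea of "correlation with objective quality metrics" more concrete, here is a minimal sketch of how such a comparison is commonly done: the Pearson (linear) and Spearman (rank) correlation between subjective scores and a metric's output. This is not AG 5's actual evaluation procedure, and the function names and the sample values in the usage note are made up purely for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson linear correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))
```

For example, with hypothetical mean opinion scores `mos = [1.8, 2.9, 3.7, 4.4]` and hypothetical metric values `metric = [28.1, 31.5, 34.0, 38.2]`, calling `pearson(mos, metric)` and `spearman(mos, metric)` gives the two coefficients that are typically reported side by side. A metric that tracks codec-specific distortions poorly would show up as a lower correlation.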

With the recent advancements in learning-based video compression algorithms, MPEG is now studying compression using these codecs. It is expected that reconstructed videos compressed using learning-based codecs will have different types of distortion compared to those induced by traditional block-based motion-compensated video coding designs. To gain a deeper understanding of these distortions and their impact on visual quality, MPEG has issued a public call related to learning-based video codecs. MPEG is open to inputs in response to the call and will invite responses that meet the call’s requirements to submit compressed bitstreams for further study of their subjective quality and potential inclusion into the CVQM dataset.

Considering the rapid advancements in the development of learning-based video compression algorithms, MPEG will keep this call open and anticipates future updates to the call.

Interested parties are kindly requested to contact the MPEG AG 5 Convenor Mathias Wien ([email protected] aachen.de) and submit responses for review at the 145th MPEG meeting in January 2024. Further details are given in the call, issued as AG 5 document N 104 and available from the mpeg.org website.

Learning-based data compression (e.g., for image, audio, video content) is a hot research topic. Research on this topic relies on datasets offering a set of common test sequences, sometimes also common test conditions, that are publicly available and allow for comparison across different schemes. MPEG’s Compressed Video for the study of Quality Metrics (CVQM) dataset is such a dataset, available here, and ready to be used also by researchers and scientists outside of MPEG. The call mentioned above is open for everyone inside/outside of MPEG and allows researchers to participate in international standards efforts (note: to attend meetings, one must become a delegate of a national body).

Bitmovin and the ATHENA research lab have been working together on ML-based enhancements to boost visual quality and improve QoE. You can read more about our published research in this blog post.

MPEG Systems-related Standards

At the 144th MPEG meeting, MPEG Systems (WG 3) produced three newsworthy items as follows:

- Progression of ISOBMFF-related standards for the carriage of Network Abstraction Layer (NAL) video data.

- Enhancement of the support of energy-efficient media consumption.

- Support of temporal scalability for geometry-based Point Cloud Compression (G-PCC).

ISO/IEC 14496-15, a part of the family of ISOBMFF-related standards, defines the carriage of Network Abstraction Layer (NAL) unit structured video data such as Advanced Video Coding (AVC), High Efficiency Video Coding (HEVC), Versatile Video Coding (VVC), Essential Video Coding (EVC), and Low Complexity Enhancement Video Coding (LCEVC). This standard has been further improved with the approval of the Final Draft Amendment (FDAM), which adds support for enhanced features such as Picture-in-Picture (PiP) use cases enabled by VVC.
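For readers less familiar with what "carriage of NAL unit structured video" means in practice: to my understanding, the file format stores each NAL unit in a sample with a big-endian length prefix instead of the start codes used in raw byte streams, and the size of that length field is signaled in the track's decoder configuration. The sketch below splits one sample payload into NAL units under that assumption. The function name `split_nal_units` and the default of 4 bytes for the length field are my own illustrative choices, not taken from the standard text.

```python
from typing import List


def split_nal_units(sample: bytes, length_size: int = 4) -> List[bytes]:
    """Split a length-prefixed sample payload into individual NAL units.

    Assumes each NAL unit is preceded by a big-endian length field of
    `length_size` bytes (an assumption of this sketch; a real reader
    would take the size from the decoder configuration record).
    """
    units = []
    pos = 0
    while pos < len(sample):
        if pos + length_size > len(sample):
            raise ValueError("truncated length field")
        nal_length = int.from_bytes(sample[pos:pos + length_size], "big")
        pos += length_size
        if pos + nal_length > len(sample):
            raise ValueError("truncated NAL unit")
        units.append(sample[pos:pos + nal_length])
        pos += nal_length
    return units
```

Calling `split_nal_units(b"\x00\x00\x00\x02\x67\x42\x00\x00\x00\x01\x68")` returns two NAL units of two bytes and one byte, respectively. The codec-specific parts of the standard then define how those units are interpreted for AVC, HEVC, VVC, EVC, or LCEVC.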

In addition to the improvements made to ISO/IEC 14496-15, separately developed amendments have been consolidated in the 7th edition of the standard. This edition has been promoted to Final Draft International Standard (FDIS), marking the final milestone of the formal standard development.

Another important standard in development is the 2nd edition of ISO/IEC 14496-32 (file format reference software and conformance). This standard, currently at the Committee Draft (CD) stage of development, is planned to be completed and reach the status of Final Draft International Standard (FDIS) by the beginning of 2025. This standard will be essential for industry professionals who require a reliable and standardized method of verifying the conformance of their implementation.

MPEG Systems (WG 3) also promoted ISO/IEC 23001-11 (energy-efficient media consumption (green metadata)) Amendment 1 to Final Draft Amendment (FDAM). This amendment introduces energy-efficient media consumption (green metadata) for Essential Video Coding (EVC) and defines metadata that enables a reduction in decoder power consumption. At the same time, ISO/IEC 23001-11 Amendment 2 has been promoted to the Committee Draft Amendment (CDAM) stage of development. This amendment introduces a novel way to carry metadata about display power reduction encoded as a video elementary stream interleaved with the video it describes. The amendment is expected to be completed and reach the status of Final Draft Amendment (FDAM) by the beginning of 2025.

Finally, MPEG Systems (WG 3) promoted ISO/IEC 23090-18 (carriage of geometry-based point cloud compression data) Amendment 1 to Final Draft Amendment (FDAM). This amendment enables the compression of a single elementary stream of point cloud data using ISO/IEC 23090-9 (geometry-based point cloud compression) and storing it in more than one track of ISO Base Media File Format (ISOBMFF)-based files. This enables support for applications that require multiple frame rates within a single file and introduces a track grouping mechanism to indicate multiple tracks carrying a specific temporal layer of a single elementary stream separately.
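The multi-track idea can be illustrated with a toy model: frames of one elementary stream are assigned to temporal layers, each layer is stored in its own track, and a player reads only the tracks up to the layer it wants. The sketch below assumes a simple dyadic layer hierarchy and uses frame indices in place of real coded frames; both are simplifications of mine, and the function names are hypothetical rather than anything defined in ISO/IEC 23090-18.

```python
def temporal_layer(index, num_layers):
    """Temporal layer of a frame in an assumed dyadic hierarchy.

    Layer 0 holds every 2**(num_layers - 1)-th frame; each higher
    layer doubles the frame rate.
    """
    for layer in range(num_layers):
        period = 2 ** (num_layers - 1 - layer)
        if index % period == 0:
            return layer
    raise AssertionError("unreachable: the last layer has period 1")


def split_into_tracks(num_frames, num_layers):
    """Map each temporal layer to the list of frame indices it carries."""
    tracks = {layer: [] for layer in range(num_layers)}
    for index in range(num_frames):
        tracks[temporal_layer(index, num_layers)].append(index)
    return tracks


def frames_for_playback(tracks, max_layer):
    """Merge all tracks up to max_layer back into presentation order."""
    selected = []
    for layer, frames in tracks.items():
        if layer <= max_layer:
            selected.extend(frames)
    return sorted(selected)
```

With 8 frames and 3 layers, `split_into_tracks(8, 3)` puts frames 0 and 4 in the base track, 2 and 6 in the next, and the odd frames in the top one. Reading only the base track plays at a quarter of the full frame rate, and adding each further track doubles it, which is the kind of multiple-frame-rate use case the amendment targets.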

MPEG Systems usually provides standards on top of existing compression standards, enabling efficient storage and delivery of media data (among others). Researchers may use these standards (including reference software and conformance bitstreams) to conduct research in the general area of multimedia systems (cf. ACM MMSys) or, specifically, on green multimedia systems (cf. ACM GMSys).

Enhancements to green metadata are welcome and necessary additions to the toolkit for everyone working on reducing the carbon footprint of video streaming workflows. Bitmovin and the GAIA project have been conducting focused research in this area for over a year now and through testing, benchmarking and developing new methods, hope to significantly improve our industry’s environmental sustainability. You can read more about our progress in this report.

MPEG-DASH Updates

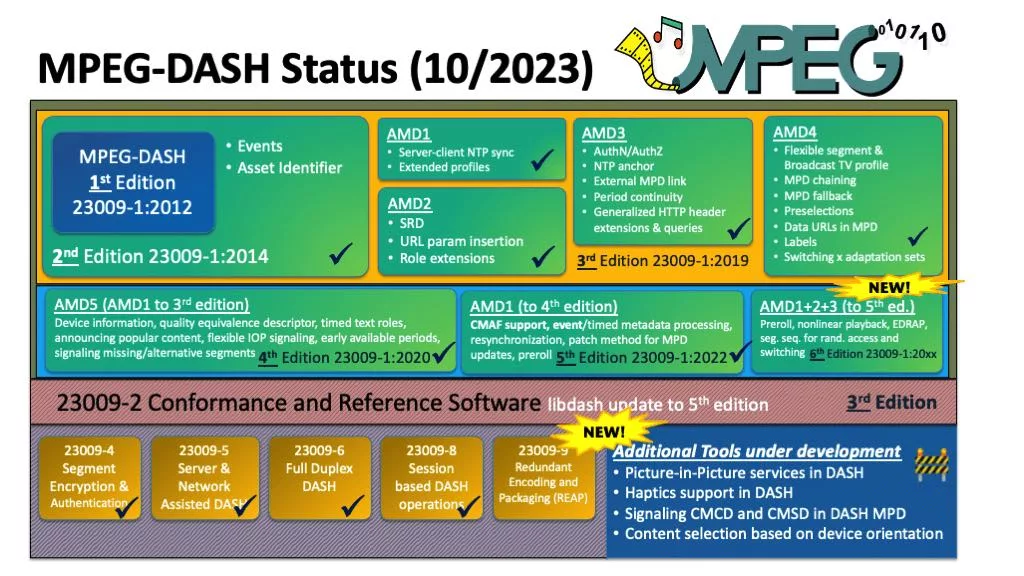

The current status of MPEG-DASH is shown in the figure below with only minor updates compared to the last meeting.

In particular, the 6th edition of MPEG-DASH is scheduled for 2024 but may not include all amendments under development. An overview of existing amendments can be found in the blog post from the last meeting. Current amendments have been (slightly) updated and progressed toward completion in the upcoming meetings. The signaling of haptics in DASH has been discussed and accepted for inclusion in the Technologies under Consideration (TuC) document. The TuC document comprises candidate technologies for possible future amendments to the MPEG-DASH standard and is publicly available here.

MPEG-DASH has been heavily researched in the multimedia systems, quality, and communications research communities. Adding haptics to MPEG-DASH would provide another dimension worth considering within research, including, but not limited to, performance aspects and Quality of Experience (QoE).

The 145th MPEG meeting will be online from January 22-26, 2024. Click here for more information about MPEG meetings and their developments.

Want to learn more about the latest research from the ATHENA lab and its potential applications? Check out this post summarizing the projects from the first cohort of finishing PhD candidates.

Notes and highlights from previous MPEG meetings can be found here.