Why is running stream tests important?

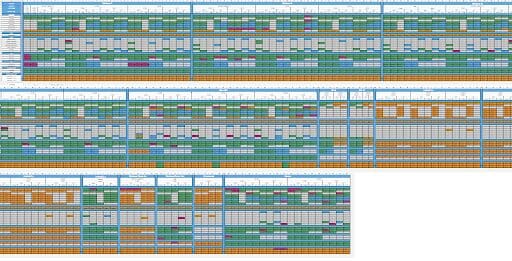

Ensuring the highest quality of experience with our solutions has always been one of our main goals at Bitmovin, and it played a big part in the creation of our test automation team. To get releases out faster and shorten our testing times, we had to build out our testing system and move it from manual to automated. Getting started with testing and quality assurance for a product line wasn’t easy, though: test cases aren’t always clear, and developing them can be grueling. Many teams have been there, especially when starting from scratch in a new Excel spreadsheet and listing every stream test case they want to cover. Manual stream testing was taking us too long. Because our focus was on quality, a single failed test meant rerunning the entire cadence until every test stream passed, to make sure we hadn’t introduced a regression.

Why Automation and what is Stream Lab?

Depending on the number of stream test cases you have and the size of your team, manual testing can make sense, but for many in SaaS this isn’t the case. Automation is the clear winner: it saves your team from running the tests by hand, entering every result into a testing doc, and refreshing the lists each time you need to test again. We knew this was what we needed internally, and it is also where the concept for Stream Lab came from, with the goal of creating the first client-facing automated stream testing solution. It comes with pre-set test cases for each use case, drawn from our internal workflow, and focuses on making development teams’ lives easier by letting them see how an active stream performs in a real streaming environment on physical devices, whether or not they currently support those devices. It is also meant to help them prioritize other tasks and to give a clear view of the complete streaming workflow.

How did it get started?

Developing an automated system is not something that can be done overnight, and implementing it correctly takes enough dedicated team members, so it can be tough if you run or work in a small team. At Bitmovin, we’ve been building up our stream test cases and system for years, taking the video streaming knowledge and experience from each workflow we work on and applying it to our test infrastructure. We originally tried a hybrid stream testing system that was partially automated, but the time it took to enter the results and go through them all delayed us greatly. As the manual workload was becoming overwhelming, we realized we had to move forward with a plan to go completely automated.

Laying the Foundation: The Framework

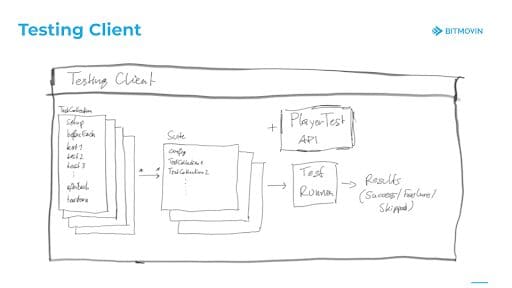

We wrote out our plans to understand each step we had to build, then got right into constructing the Player streaming test framework. As nothing on the market met our needs, we had to develop an in-house solution from the ground up, tailored to our player testing requirements. With the initial steps of the framework done, we noticed that the code for the test cases was long and complex, so to make player tests easier to write we built a special syntax called “PlayerTest”. This cut down on the amount of code needed and let us write tests in a more fluent and compact fashion, which also made each test case easier to read and process.
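The actual “PlayerTest” syntax is not reproduced in this post. To give a feel for what a fluent, compact test style can look like in general, here is a minimal Python sketch. Everything in it (`FakePlayer`, `FluentPlayerTest`, and the method names) is hypothetical and made up for illustration; it is not Bitmovin’s syntax or API.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class FakePlayer:
    """Stand-in for a real player; it only tracks a little state."""
    source: str = ""
    playing: bool = False
    position: float = 0.0


@dataclass
class FluentPlayerTest:
    """Hypothetical fluent test builder (not the real PlayerTest syntax)."""
    name: str
    steps: List[Callable[[FakePlayer], None]] = field(default_factory=list)

    def load(self, url: str) -> "FluentPlayerTest":
        self.steps.append(lambda p: setattr(p, "source", url))
        return self  # returning self is what allows chaining

    def play(self) -> "FluentPlayerTest":
        # The fake player only "plays" if a source has been loaded.
        self.steps.append(lambda p: setattr(p, "playing", bool(p.source)))
        return self

    def seek(self, seconds: float) -> "FluentPlayerTest":
        self.steps.append(lambda p: setattr(p, "position", seconds))
        return self

    def expect(self, description: str,
               predicate: Callable[[FakePlayer], bool]) -> "FluentPlayerTest":
        def check(p: FakePlayer) -> None:
            if not predicate(p):
                raise AssertionError(f"{self.name}: expected {description}")
        self.steps.append(check)
        return self

    def run(self, player: FakePlayer) -> bool:
        """Execute the recorded steps in order; False if any expectation fails."""
        try:
            for step in self.steps:
                step(player)
        except AssertionError:
            return False
        return True


# The whole test case reads as one chained expression.
passed = (
    FluentPlayerTest("seek after start")
    .load("https://example.com/stream.mpd")
    .play()
    .seek(30.0)
    .expect("player to be playing", lambda p: p.playing)
    .expect("position to be 30 seconds", lambda p: p.position == 30.0)
    .run(FakePlayer())
)
```

The design point is that each method records a step and returns the builder, so a test is a short readable chain rather than a long block of setup and assertion code.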

Making it visual: Application to Complete Automation

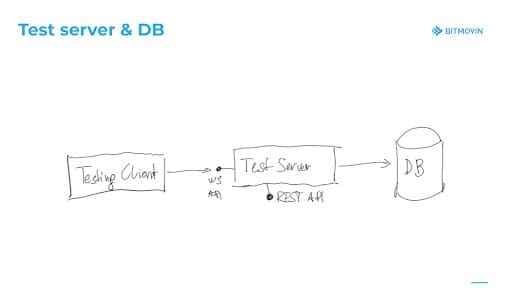

Next, we had to develop and deploy an application where the tests could be monitored and that people could easily access from anywhere. While working on the application, we were also adding the “test service”, the part of the system that collects and stores the results in the cloud and provides test reports that can be viewed in the app. This was a good milestone to reach, and it was definitely going to make our lives easier.

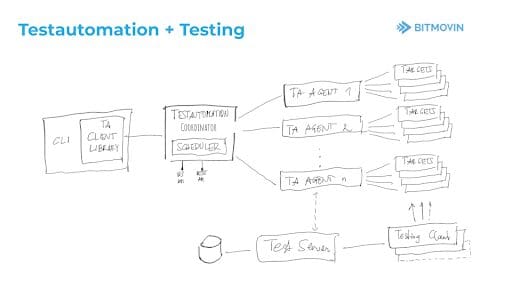

With the essential pieces in place to run tests on the devices we wanted to cover, as shown in the layouts above, the focus shifted to developing the automation side and merging the pieces together. The stream test automation system was designed as a distributed system with three parts, each with a specific role, that interact with one another:

- The “agents” are deployed locally at our testing sites to control the individual targets (devices & browsers).

- The “coordinator” manages the “agents” globally over the cloud and keeps track of idle & busy targets, scheduling tests accordingly as they queue up.

- Once a test session is started on a target, it loads the “test client”, which executes the tests and reports back the results.

With the pieces now paired, an incoming test request caused the test client to be loaded onto the devices, and the results were sent back and stored in the database. Meanwhile, the “agents” continuously checked the status of the running tests and, when a target was free, moved on to the next request in the queue.
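The interplay between coordinator, agents, and targets described above can be sketched roughly as follows. This is a simplified, single-process Python illustration under stated assumptions: the class names, method names, and the in-memory `results` dictionary (standing in for the results database) are all hypothetical, and the real system is distributed rather than a single script.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List, Optional, Tuple


@dataclass
class Target:
    """A device or browser that can run one test session at a time."""
    name: str
    busy: bool = False


class Agent:
    """Hypothetical agent: controls the targets at one testing site."""

    def __init__(self, site: str, target_names: List[str]) -> None:
        self.site = site
        self.targets = [Target(n) for n in target_names]

    def idle_target(self) -> Optional[Target]:
        return next((t for t in self.targets if not t.busy), None)


class Coordinator:
    """Hypothetical coordinator: tracks idle/busy targets and schedules tests."""

    def __init__(self, agents: List[Agent]) -> None:
        self.agents = agents
        self.queue: Deque[str] = deque()
        self.results: Dict[str, bool] = {}  # stands in for the results DB

    def submit(self, test_id: str) -> None:
        self.queue.append(test_id)

    def schedule(self) -> List[Tuple[str, Target]]:
        """Assign queued tests to idle targets, oldest request first."""
        started = []
        for agent in self.agents:
            while self.queue:
                target = agent.idle_target()
                if target is None:
                    break  # this site is fully busy; try the next agent
                target.busy = True
                started.append((self.queue.popleft(), target))
        return started

    def report(self, test_id: str, target: Target, passed: bool) -> None:
        """Called when the test client on a target reports its result."""
        self.results[test_id] = passed
        target.busy = False  # the target is free for the next request


coordinator = Coordinator([Agent("site-a", ["tv-1", "chrome-1"])])
for test_id in ["t1", "t2", "t3"]:
    coordinator.submit(test_id)

first = coordinator.schedule()            # t1 and t2 start; t3 waits
coordinator.report("t1", first[0][1], True)
second = coordinator.schedule()           # t3 starts on the freed target
```

With two targets and three requests, the third request stays queued until one target reports back, which is the idle/busy bookkeeping the coordinator is responsible for.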

While developing the stream test automation system, we were also putting together our testing wall of physical devices to make sure the tests ran correctly and covered our Player’s device support. This part needed automating as well: the devices had to be turned on and off without issue, and the application had to be deployed to all targets. To facilitate this, we built another in-house solution, as it had fewer limits and could cover more devices than other options on the market. This helped us make sure there was nothing we didn’t know about how the Bitmovin Player behaves on older and newer device models, so we could be confident in our solution and in our ability to deliver a high-quality playback experience. Having the physical devices was also a huge benefit because it allowed us to parallelize testing and run more tests on the same device types, which have now been tested for a total of 571,517 minutes (193,412 TV minutes) over the last 30 days.

Implementing Stream Lab

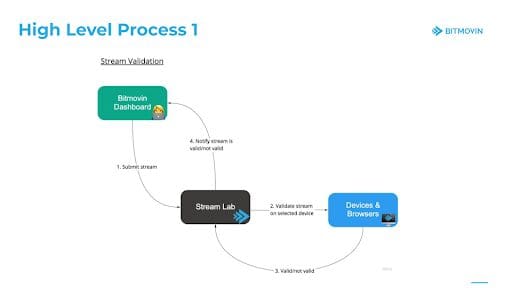

It’s been a long ride to get to this point, and it was definitely a learning experience. Our internal test cadence has grown to 1000+ test cases for our player alone, and we continue to add more with every new feature or supported device. With Stream Lab, which is now in Beta, we’ve applied what we learned from automating our own testing and achieved the goal of introducing the first client-facing automated testing solution for streaming. Test cases and devices will be added gradually, and Stream Lab will eventually become a fully-fledged testing solution that can replace your current process. The one difference between the internal system and Stream Lab is that Stream Lab is accessible through the Bitmovin Dashboard, and when you submit your stream, it goes through a validation process to make sure the stream is suitable for testing. Once validated, the URL is queued for testing, which can be seen in the workflows below.
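Stream Lab’s actual validation rules are not described in this post. Purely to illustrate the validate-then-queue idea, here is a minimal Python sketch. The checks (an http or https scheme, a host, and a `.mpd` or `.m3u8` manifest extension) and the names `validate_stream_url`, `submit_stream`, and `test_queue` are assumptions made for the example, not the product’s behavior.

```python
from collections import deque
from typing import Deque, List
from urllib.parse import urlparse

# Assumption for this sketch: only DASH and HLS manifests are accepted.
SUPPORTED_MANIFESTS = (".mpd", ".m3u8")

# Stands in for the queue of streams waiting to be tested.
test_queue: Deque[str] = deque()


def validate_stream_url(url: str) -> List[str]:
    """Return a list of problems; an empty list means the basic checks pass."""
    problems = []
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https"):
        problems.append("URL must use http or https")
    if not parsed.netloc:
        problems.append("URL has no host")
    if not parsed.path.lower().endswith(SUPPORTED_MANIFESTS):
        problems.append("path does not end in a known manifest extension")
    return problems


def submit_stream(url: str) -> bool:
    """Queue the stream only if validation finds no problems."""
    if validate_stream_url(url):
        return False
    test_queue.append(url)
    return True


accepted = submit_stream("https://example.com/video/manifest.mpd")
rejected = submit_stream("ftp://example.com/video.mp4")
```

A real validator would likely also fetch and parse the manifest; this sketch only shows the ordering, where nothing reaches the queue until it has passed validation.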

Want to test Stream Lab?

We’re really interested to see how Stream Lab can help you and to hear your feedback about where we should take the solution next. We have more than a few ideas, but we’d love to hear yours, so let us know your thoughts in the Bitmovin Community.

We’ve recently given access to everyone who is trialing Bitmovin or is a current client, so if you already have access to the dashboard, you should see “Stream Lab” under the “Player” section. If you’re new and want to check it out, sign up for a trial.

As always: