Latency is a major challenge for the online video industry. This article takes us through what latency is, why it’s important for streaming and how CMAF low latency streaming can help to solve the problems.

Live stream “latency” is the time delay between the transmission of live content from the source and the moment it is received and displayed by the playback device. Put another way, it is the difference between the moment the event is captured on camera (or the live feed comes out of a playout server) and the moment the end user sees the content on their device’s screen.

Typical broadcast linear stream delay ranges from 3 to 5 seconds, whereas online streaming latency has historically ranged from 30 seconds to over 60 seconds, depending on the viewing device and the video workflow used.

The challenge for the online streaming industry is to reduce this latency to a range closer to linear broadcast signal latency (3-5 seconds) or even lower, depending on the needs of the application. Many video providers have therefore taken steps to optimize their live streaming workflows by rolling out new streaming standards like the Common Media Application Format (CMAF) and by making changes to encoding, CDN delivery, and playback technologies to close the latency gap and provide a near real-time streaming experience for end users. This reduced latency for online linear video streaming is commonly referred to as “Low Latency”.

Linear stream/signal latency represents a continuum, as indicated in the diagram above. This diagram illustrates the historic reality of online streaming protocols such as HLS and DASH exhibiting higher latency, and nonadaptive bitrate protocols like RTP/RTSP and WebRTC exhibiting much lower sub-second latency. The discussion here is based on the adaptive bitrate protocols, HLS and MPEG-DASH.

Why is this important for me?

The main goal of Low Latency streaming is to keep playback as close as possible to real-time broadcasts so users can engage and interact with content as it’s unfolding. Typical applications include sports, news, betting, and gaming. Another class of latency-sensitive applications includes feedback data as part of the interactive experience; one example is the ClassPass virtual fitness class, as announced by Bitmovin.

Other interactive applications include game shows and social engagement. In these use cases, synchronizing latency across multiple devices becomes valuable so that viewers have a similar chance to answer questions or interact in other ways.

What is CMAF?

Common Media Application Format (CMAF) was introduced in 2016 and was co-authored by Apple and Microsoft to create a standardized transport container for streaming VoD and linear media using the MPEG-DASH or HLS protocols.

The main goals were:

1) Reduce overhead, encoding, and delivery costs through standardized encryption methods

2) Simplify complexities associated with video streaming workflows and integrations (e.g., DRM, advertising, closed captioning, caching)

3) Support a single format that can be used to stream across any online streaming device

When we originally posted our thoughts on CMAF, adoption was still in its infancy. But, in recent months we have seen increased adoption of CMAF across the video workflow chain and by device manufacturers. As end-user expectations to stream linear content with latency equivalent to traditional broadcast have continued to increase, and content rights to stream real-time have become more and more commonplace, CMAF has stepped in as a viable solution.

What is CMAF Low Latency?

When live streaming, the media (video/audio) is sent in segments that are each a few seconds (2-6 sec) long. This inherently adds a few seconds of delay from transmission to playback as the segments have to be encoded, delivered, downloaded, buffered, and then rendered by the player client, all of which is limited at a minimum by the segment size.

CMAF now comes with a low latency mode where each segment can be split up into smaller units, called “chunks” where each chunk can be 500 milliseconds or lower depending on encoder configurations. With low latency CMAF or chunked CMAF, the player can now request incomplete segments and get all available chunks to render instead of waiting for the full segment to become available, thereby cutting latency down significantly.

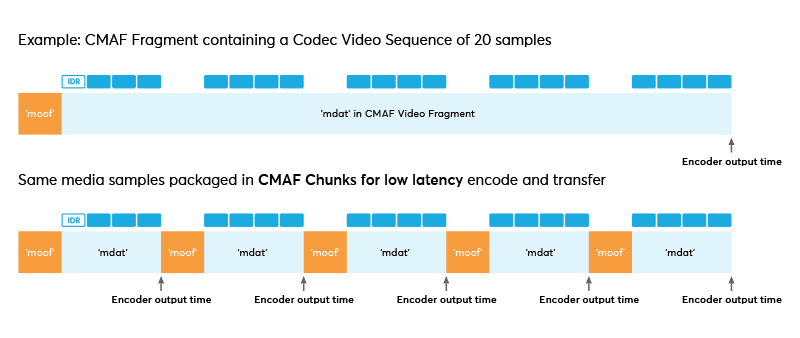

As shown in the diagram above, a “chunk” is the smallest referenceable media unit and, by definition, contains a “moof” box and an “mdat” box. The mdat of the first chunk holds an IDR (Instantaneous Decoder Refresh) frame, which is required to begin every “segment”. A “segment” is a collection of one or more “fragments”, and a “fragment” is a collection of one or more chunks. The “moof” box, as shown in the diagram, is required by the player to decode and render individual chunks.
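To make the chunk structure more concrete, here is a minimal sketch of how a client could walk the top-level boxes of a CMAF segment and count the complete “moof” + “mdat” pairs it has received so far. It relies only on the generic ISO base media box header layout (a 32-bit big-endian size followed by a four-character type). The function names `iter_boxes`, `count_chunks`, and `make_box` are hypothetical, and this is not how the Bitmovin Player is implemented.

```python
import struct
from typing import Iterator, Tuple


def iter_boxes(data: bytes) -> Iterator[Tuple[str, bytes]]:
    """Yield (type, payload) for each complete top-level box in data."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:  # a 64-bit "largesize" follows the type field
            if offset + 16 > len(data):
                break
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:  # box extends to the end of the data
            size = len(data) - offset
        if size < header or offset + size > len(data):
            break  # truncated or malformed box: stop rather than guess
        payload = data[offset + header:offset + size]
        yield box_type.decode("ascii", "replace"), payload
        offset += size


def count_chunks(data: bytes) -> int:
    """Count moof boxes that are immediately followed by an mdat box."""
    types = [box_type for box_type, _ in iter_boxes(data)]
    return sum(1 for a, b in zip(types, types[1:]) if a == "moof" and b == "mdat")


def make_box(box_type: str, payload: bytes) -> bytes:
    """Build a box with a 32-bit size header (helper for the demo below)."""
    return struct.pack(">I4s", 8 + len(payload), box_type.encode("ascii")) + payload


# Synthetic segment: one styp box followed by four moof+mdat chunks.
segment = make_box("styp", b"cmfc") + b"".join(
    make_box("moof", b"\x00" * 4) + make_box("mdat", b"\x01" * 10)
    for _ in range(4)
)
complete = count_chunks(segment)       # every chunk has arrived
partial = count_chunks(segment[:-3])   # last mdat still downloading
```

In the partial case the last “mdat” is incomplete, so only the chunks that have fully arrived are counted. This mirrors the idea that a player can start working on the available chunks of a segment that is still being delivered.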

At the transmit end of the chain, encoders can output each chunk for delivery immediately after encoding it, and the player can reference and decode each one separately.

What are we doing to solve the latency problem?

The Bitmovin Player has supported CMAF playback for a while now. Recently, we also added support for CMAF low latency playback on HTML5 (web) and native app (mobile) platforms. The Bitmovin Player can be configured to turn on low latency mode, which enables chunk-based decoding and rendering without having to wait for the full segment to be downloaded.

The Bitmovin Player optimizes start-up logic, determines buffer sizes, and adjusts the playback rate to keep live streaming latency close to real time. In our testing, latency can go as low as 1.8 seconds while maintaining stream stability and good video quality.
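One common way to think about playback rate adjustment is as a small controller that speeds playback up when the player has fallen behind its target latency and slows it down when it is too close to the live edge. The sketch below is an illustration of that general idea only. The function name `catch_up_rate` and the `gain`, `max_delta`, and `dead_zone` parameters are assumptions, not the Bitmovin Player's actual algorithm or API.

```python
def catch_up_rate(current_latency: float, target_latency: float,
                  gain: float = 0.1, max_delta: float = 0.1,
                  dead_zone: float = 0.1) -> float:
    """Return a playback rate that nudges latency toward the target.

    All values are in seconds except the returned rate (1.0 = normal speed).
    """
    drift = current_latency - target_latency
    if abs(drift) <= dead_zone:
        return 1.0  # close enough: play at normal speed
    # Proportional correction, clamped so the speed change stays subtle.
    delta = max(-max_delta, min(max_delta, gain * drift))
    return 1.0 + delta


behind = catch_up_rate(3.0, 1.8)      # far behind live: speed up (clamped)
on_target = catch_up_rate(1.85, 1.8)  # inside the dead zone: normal speed
too_close = catch_up_rate(1.0, 1.8)   # ahead of target: slow down slightly
```

Clamping the rate change keeps the adjustment hard to notice for viewers, at the cost of taking longer to return to the target latency after a large drift.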

CMAF low latency is compatible with the other features that the Bitmovin Player already supports today (e.g., ads, DRM, analytics, closed captioning).

In the diagram shown above, player buffering and decoding behavior is shown, contrasting the standard segment (standard latency) mode with the chunked segment mode, corresponding to low latency streaming.

The diagram shows that with non-chunked segments, a segment duration of 4×C (where C is the duration of the smallest unit, the chunk), and three-segment buffering, the player latency is typically 14×C.

In contrast, chunked segments with CMAF are shown to achieve a latency of 2×C instead of 14×C, a sevenfold improvement.
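As a worked example of this comparison, the sketch below plugs in a chunk duration of 500 milliseconds, the figure mentioned earlier for chunk size. The multipliers 14 and 2 come from the comparison above; the function name `player_latency_seconds` and the choice of 500 ms are assumptions made for illustration.

```python
def player_latency_seconds(chunk_multiples: int, chunk_ms: float) -> float:
    """Convert a latency expressed in chunk durations into seconds."""
    return chunk_multiples * chunk_ms / 1000.0


CHUNK_MS = 500  # assumed chunk duration C, in milliseconds

segment_duration = player_latency_seconds(4, CHUNK_MS)   # one segment = 4 chunks
standard_latency = player_latency_seconds(14, CHUNK_MS)  # non-chunked mode
chunked_latency = player_latency_seconds(2, CHUNK_MS)    # chunked CMAF mode
improvement = standard_latency / chunked_latency
```

With these assumptions a segment lasts 2 seconds, the standard mode sits about 7 seconds behind, and the chunked mode about 1 second behind. The ratio stays at 7 regardless of the chunk duration chosen.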

Are there any trade-offs?

In short, yes. There are some considerations, and some tradeoffs when trying to achieve low latency while still providing a high-quality viewing experience.

Buffer Size: Ideally, we want to render frames as soon as the player receives them, which means maintaining a very small buffer. However, a small buffer also makes the viewing experience less stable, especially when the player encounters unexpected interruptions (like dropped frames or frame bursts) due to network or encoder issues. Without enough locally stored frames, the player stalls or freezes until the buffer refills with new frames. This in turn requires the player to re-sync its presentation timing and leads to perceived distortions in the playback experience. Therefore, it’s recommended to maintain at least a 1-second buffer so the player can withstand some network disruptions and provide a smoother playback experience for viewers.
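The buffer trade-off can be illustrated with a deliberately simplified model: during a delivery gap the player keeps playing from its buffer, and it stalls only for the part of the gap the buffer cannot cover. The function name `total_stall_seconds`, the gap values, and the assumption that the buffer is full again before each gap are all hypothetical simplifications, not measurements.

```python
from typing import Sequence


def total_stall_seconds(buffer_s: float, delivery_gaps_s: Sequence[float]) -> float:
    """Sum the playback stalls caused by a series of delivery gaps.

    Simplifying assumption: the buffer is back at buffer_s seconds
    before each gap starts.
    """
    return sum(max(0.0, gap - buffer_s) for gap in delivery_gaps_s)


gaps = [0.5, 0.1, 1.2]  # hypothetical delivery interruptions, in seconds

small_buffer_stall = total_stall_seconds(0.2, gaps)  # 0.2 s buffer
one_second_stall = total_stall_seconds(1.0, gaps)    # 1 s buffer
```

In this toy scenario the 0.2-second buffer stalls for about 1.3 seconds in total, while the 1-second buffer stalls for about 0.2 seconds, at the price of playing further behind the live edge.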

DRM is another factor that might introduce additional delay at start-up: the license delivery turnaround time blocks content playback even when low latency is turned on. In this case, the player adjusts to the latest live frame once the license has been delivered, so the latency remains consistent with the configured low latency value.

How can I monitor these tradeoffs?

For all of the above reasons, balancing a robust, scalable online streaming platform with minimal re-buffering and stream interruptions against the time-sensitive behavior of low latency CMAF streaming can be challenging. The solution is a holistic view of the streaming experience, provided by Bitmovin Analytics.

Bitmovin Analytics provides insights into session quality so customers can monitor the performance of low latency streaming sessions and make real-time decisions to adjust player and encoding configurations to improve the experience. Bitmovin offers all existing video quality metrics (e.g. Startup time, Buffer Rate) and a few additional metrics to specifically monitor low latency streaming at a content level, such as:

- Target Latency

- Observed Latency

- Playback Rate

- Dropped Frames

- Bandwidth Used
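To show how metrics like these could be combined when monitoring a session, here is a small sketch that holds one sample of the values listed above and flags likely problems. The `LowLatencySample` class, its field names, the `flag_issues` function, and the thresholds are all hypothetical; they do not reflect the Bitmovin Analytics API or its data model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LowLatencySample:
    """One hypothetical snapshot of low latency metrics for a session."""
    target_latency_s: float
    observed_latency_s: float
    playback_rate: float
    dropped_frames: int
    bandwidth_kbps: float


def flag_issues(sample: LowLatencySample,
                latency_tolerance_s: float = 0.5,
                max_dropped_frames: int = 5) -> List[str]:
    """Return human-readable warnings for a sample (assumed thresholds)."""
    issues = []
    if sample.observed_latency_s - sample.target_latency_s > latency_tolerance_s:
        issues.append("latency above target")
    if sample.playback_rate != 1.0:
        issues.append("player is adjusting playback rate")
    if sample.dropped_frames > max_dropped_frames:
        issues.append("dropped frames above threshold")
    return issues


lagging = LowLatencySample(1.8, 3.0, 1.1, 0, 4500.0)
healthy = LowLatencySample(1.8, 1.9, 1.0, 2, 4500.0)

lagging_issues = flag_issues(lagging)
healthy_issues = flag_issues(healthy)
```

Comparing observed latency against target latency, rather than looking at either value alone, is what tells you whether the player is keeping up with the configured low latency setting.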

Besides the player, what else causes latency?

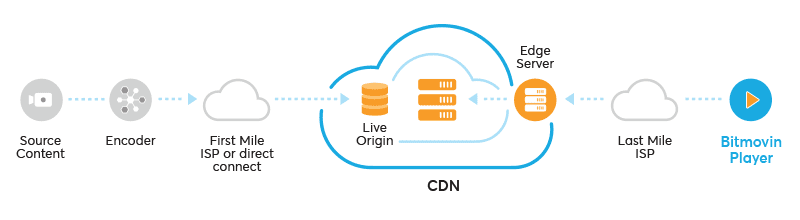

Chunked CMAF streams and low latency-enabled players are key elements in reducing latency in online streaming. However, there are other components in the video delivery chain that introduce latency at each step that need to be considered for further optimization:

- Encoder: The encoder needs to be able to ingest live streams as quickly as possible with the encoding configuration optimized to produce the right size of chunks and segments that can then be uploaded to the Origin Server for delivery.

- First Mile Upload: The upload time depends on the connection type at the upload facility (wired, wireless) and affects overall latency.

- CDN: The CDN technologies need to allow for chunk-based transfers and to adopt the right caching strategies to propagate chunks across the different delivery nodes in a time-sensitive fashion.

- Last Mile: The end user’s network conditions also influence overall latency, for example whether the user is on a wired, WiFi, or cellular connection. It also depends on how close the user is to the CDN edge.

- Playback: As discussed earlier, the player needs to optimize start behavior and balance buffering and playback rate to enable quick download and rendering to always be as close as possible to live time.

These steps are shown in the end-to-end video flow diagram.

With chunked segments, we’ve seen end-to-end latency as low as 1.8 seconds in our testing. However, customers need to consider their entire workflow setup and optimize latency along the full chain to reach the lowest latency achievable with their specific workflow and network.
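One simple way to reason about the full chain is to keep a latency budget per stage and look at which stage dominates. The sketch below does this with placeholder numbers. The stage values are invented purely for illustration and are not measurements from any real workflow; the function names `end_to_end_latency` and `largest_contributor` are likewise hypothetical.

```python
from typing import Dict


def end_to_end_latency(budget_s: Dict[str, float]) -> float:
    """Total latency as the sum of the per-stage contributions (seconds)."""
    return sum(budget_s.values())


def largest_contributor(budget_s: Dict[str, float]) -> str:
    """Name of the stage that adds the most latency."""
    return max(budget_s, key=budget_s.get)


# Placeholder values only: replace with numbers measured in your own workflow.
budget = {
    "encoder": 0.5,
    "first_mile": 0.2,
    "cdn": 0.3,
    "last_mile": 0.2,
    "playback": 1.0,
}

total = end_to_end_latency(budget)
bottleneck = largest_contributor(budget)
```

Tracking the budget per stage makes it easier to see where optimization effort pays off, since shaving time from a small contributor barely moves the total.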

In conclusion …

As viewers migrate from a large-screen, appointment-TV experience to a time-shifted, place-shifted experience with multi-device online streaming, content producers and rights holders have responded by making more premium content available online. They have also introduced brand new classes of online media experiences that involve interactivity and emphasize low latency delivery and playback.

The Bitmovin low latency solution, as shown here, consists of the Bitmovin Player and Bitmovin Analytics working together to balance the needs of low latency live streaming across multiple devices. Together they provide the level of insight needed to proactively assess viewers’ quality of experience and to take action if low latency streaming leads to undesired consequences.
