Containerization has opened up new possibilities in performance and efficiency and is a perfect fit for video encoding

What is the difference between containerization and virtualization?

Server-side technology has been on a steep development curve over recent years, as architects and system engineers have worked to streamline and optimize their workflows, as well as improve the performance of their applications. Virtualization was one of the major breakthroughs on this curve and has now been a part of the IT landscape for a long time. By running multiple virtual machines (VMs) on a single server, each running its own operating system and staying independent from each other, IT departments have been able to better utilize their hardware resources and optimize their load management.

Containerization has taken virtualization one step further by allowing applications to run in dedicated environments that are in turn hosted by a common operating system kernel. Because they share an OS kernel, containers are portable, light on resources and extremely scalable, making them a much more powerful and versatile solution than VMs.

The main advantages of containers

The characteristics that make containers so easily portable are the same characteristics that make them so well suited to modern cloud-based architecture.

First, each container runs without external dependencies, so the developer has complete control over the environment that their app runs in without being limited by an inflexible OS. Furthermore, this same independence ensures that the environment will be the same anywhere, whether it is deployed in the public cloud, on a private cloud or in the local test environment.

The fact that containers share an OS kernel makes them much smaller than VMs, each of which requires its own OS. This makes it very easy to scale containers across a cloud cluster, because they use fewer resources. It also makes them much more efficient in terms of CPU usage, memory and storage. All of this makes for a solution that is fast to initialize, quick to respond and, most importantly, scales massively in a matter of seconds.

Containerized Video Encoding

Linear vs Containerized

Before we start looking into containerized encoding, it is best to run through the linear approach that is employed by the majority of encoding services and systems. This approach is to input a video and simply crunch through the file from start to finish, writing each segment into the encoded profiles in chronological order. In our tests, encoding to a standard bitrate ladder with FFmpeg or similar solutions on an 8-core machine usually resulted in a 0.3x to 1x realtime factor.

| Codec | Resolution | Bitrate |
| --- | --- | --- |
| h264 | 1080p | 4.8 Mbit/s |
| h264 | 720p | 2.4 Mbit/s |
| h264 | 480p | 1.2 Mbit/s |
| h264 | 360p | 0.8 Mbit/s |
| h264 | 240p | 0.4 Mbit/s |
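To make the realtime factor concrete, here is a minimal Python sketch of the arithmetic. The ladder values come from the table above; the function names and the 90-minute example are our own illustrative assumptions, and the size estimate covers video only (no audio or container overhead).

```python
# Bitrate ladder from the table above: (codec, resolution, bitrate in Mbit/s).
LADDER = [
    ("h264", "1080p", 4.8),
    ("h264", "720p", 2.4),
    ("h264", "480p", 1.2),
    ("h264", "360p", 0.8),
    ("h264", "240p", 0.4),
]


def encode_minutes(source_minutes: float, realtime_factor: float) -> float:
    """Wall-clock minutes needed to encode at a given realtime factor.

    A factor of 1.0 means encoding takes as long as the video plays;
    0.3 means the encoder gets through 0.3 s of video per second.
    """
    if realtime_factor <= 0:
        raise ValueError("realtime_factor must be positive")
    return source_minutes / realtime_factor


def ladder_size_megabytes(source_minutes: float) -> float:
    """Rough total size of all renditions (video only, assumption)."""
    total_mbit_per_s = sum(bitrate for _, _, bitrate in LADDER)
    return total_mbit_per_s * source_minutes * 60 / 8


if __name__ == "__main__":
    for factor in (0.3, 1.0):
        print(f"90 min at {factor}x: {encode_minutes(90, factor):.0f} min")
```

In other words, at the slow end of the range a feature-length file keeps a linear encoder busy for several hours.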

The very nature of adaptive bitrate streaming makes it perfect for a containerized approach.

The key to exploiting the versatility and power of containers in a video encoding service is to scale horizontally. This horizontal scaling allows us to encode each segment separately, which in turn makes it possible to spread a single video file across multiple instances and encode its segments simultaneously. The immediate effect of this is that the video encoding can be completed much faster simply by adding more containerized encoding instances. We have actually tracked up to 100x real time, but in addition to speed there are a number of other very important benefits to the containerized approach.
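The idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `encode_segment` is a hypothetical stand-in for a containerized worker (it just returns an output name rather than encoding anything), and a local thread pool stands in for a cluster of encoding instances.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_segments(duration_s: float, segment_s: float) -> list[tuple[float, float]]:
    """Return (start, end) time ranges that cover the whole video."""
    if segment_s <= 0:
        raise ValueError("segment_s must be positive")
    segments = []
    start = 0.0
    while start < duration_s:
        end = min(start + segment_s, duration_s)
        segments.append((start, end))
        start = end
    return segments


def encode_segment(segment: tuple[float, float]) -> str:
    """Hypothetical stand-in for one worker encoding one segment."""
    start, end = segment
    return f"segment_{start:07.1f}_{end:07.1f}.mp4"


def encode_parallel(duration_s: float, segment_s: float, workers: int) -> list[str]:
    """Encode all segments concurrently and return outputs in playback order."""
    segments = split_into_segments(duration_s, segment_s)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so the output stays chronological
        # even though segments may finish encoding out of order.
        return list(pool.map(encode_segment, segments))


if __name__ == "__main__":
    print(encode_parallel(duration_s=10, segment_s=4, workers=3))
```

Because each segment is independent, adding workers shortens the job until there are as many workers as segments.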

Advantages and Opportunities

By approaching the encoding process segment by segment, we can see that what was once one large process has become a lot of very small processes. If we combine this with the opportunities that containerization presents, then we have the possibility to completely re-engineer the encoding workflow to much better meet the specific needs of a particular business model.

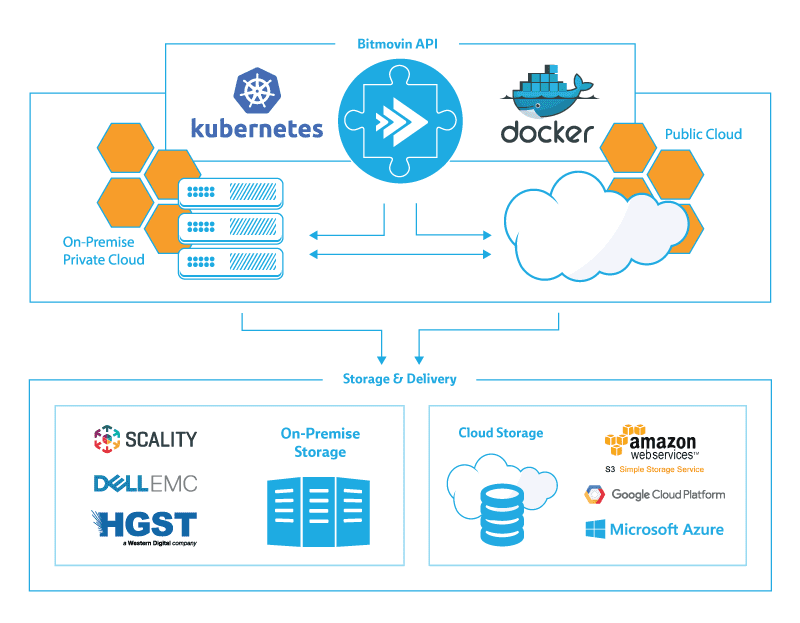

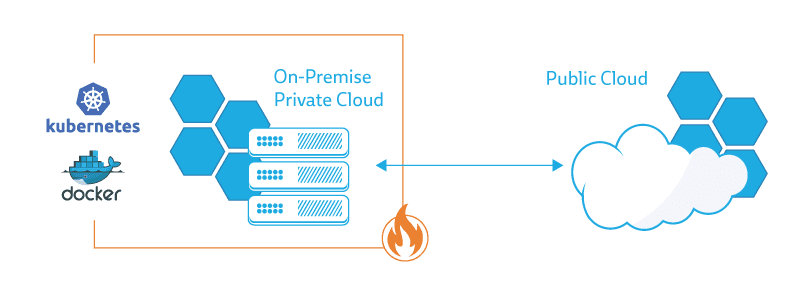

On-Prem, Cloud and Hybrid Cloud

Exploiting the massive resources that are available on the public cloud is just the beginning of the containerized encoding story. By using a true container-based encoding service, it is possible to run the same environment in the public cloud, on a private cloud, or across both simultaneously. This creates a whole new level of resource management, where you no longer need to decide whether to run your service on-prem or in the cloud. The nature of the containerized system allows you to create a unified infrastructure that incorporates both zones within the same system, so you can switch between on-prem and public cloud as required. This might be because your on-prem hardware maxes out due to heavy demand and needs to push overflow to the public cloud, or it might be because you have sensitive data that needs to stay behind your firewall.
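As a rough illustration of that decision, the Python sketch below places jobs on-prem while capacity lasts, sends overflow to the public cloud, and keeps sensitive jobs behind the firewall. The `Job` fields, the slot counts and the "queued" fallback are assumptions made for the example, not a description of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    slots_needed: int        # hypothetical unit of on-prem encoding capacity
    sensitive: bool = False  # sensitive content must stay behind the firewall


def place_jobs(jobs: list[Job], on_prem_slots: int) -> dict[str, str]:
    """Return {job name: "on-prem" | "cloud" | "queued"}."""
    placement = {}
    free = on_prem_slots
    # Sensitive jobs go first so they get priority on on-prem capacity.
    for job in sorted(jobs, key=lambda j: not j.sensitive):
        if job.slots_needed <= free:
            placement[job.name] = "on-prem"
            free -= job.slots_needed
        elif job.sensitive:
            placement[job.name] = "queued"  # wait rather than leave the firewall
        else:
            placement[job.name] = "cloud"   # overflow to the public cloud
    return placement


if __name__ == "__main__":
    jobs = [
        Job("trailer", 4),
        Job("unreleased-film", 6, sensitive=True),
        Job("archive", 3),
    ]
    print(place_jobs(jobs, on_prem_slots=8))
```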

Utilizing Spot Instances

Spot instances are a great way to save money and have become a popular resource for many companies running processes in the cloud. Amazon has turned this into a market-driven system where you can actually bid your own price and compete for available spot instances. The flipside of the lower price is that the reliability of your instance cannot be guaranteed. A higher bidder can take an instance away from you without notice. In a linear workflow this can lead to a complete loss of your encoding, forcing you to restart the job from the beginning. But in a containerized workflow, losing one instance is almost insignificant. The interrupted segment is simply moved to another instance and the entire job continues without noticeable disruption.

Taking this a step further, it is even possible to mix and match instance types depending on how the spot market develops. The encoding coordinator, which is the instance that controls each encoding job and assigns segments to instances, can be placed on a higher cost instance to ensure that it remains stable. The worker instances, which is where the encodings actually happen, are interchangeable, so they can be placed on lower cost, higher risk instances. For example, if c4.2xlarge instances are in short supply on the spot market, we could start the coordinator on a c4.xlarge instance and the workers on even bigger instance types (running fewer instances in turn). If we see the spot price drop for another instance type (c3.2xlarge, for example), we can switch to these and decommission the more expensive nodes we’ve been using so far.
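The coordinator's handling of a lost worker can be sketched as a small simulation. This is a simplified Python model under our own assumptions: segments are handed out round-robin, and `interrupted` is a hypothetical set of (worker, segment) pairs marking where a spot instance is reclaimed mid-encode. A real coordinator would react to actual interruption events instead.

```python
from collections import deque


def run_job(segments, workers, interrupted):
    """Assign segments to workers; requeue any segment whose worker is lost.

    Returns {segment: worker that finished it}.
    """
    pending = deque(segments)
    alive = list(workers)
    done = {}
    turn = 0
    while pending:
        if not alive:
            raise RuntimeError("no workers left to finish the job")
        segment = pending.popleft()
        worker = alive[turn % len(alive)]
        if (worker, segment) in interrupted:
            # Spot instance reclaimed: drop the worker and retry the segment later.
            alive.remove(worker)
            pending.append(segment)
            continue
        done[segment] = worker
        turn += 1
    return done


if __name__ == "__main__":
    result = run_job(
        segments=[0, 1, 2, 3],
        workers=["spot-a", "spot-b"],
        interrupted={("spot-b", 1)},
    )
    print(result)
```

Only the interrupted segment is repeated; every segment that had already finished stays finished, which is why losing a worker costs so little.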

Security

A containerized encoding approach accommodates virtual private cloud (VPC) setups where customers can use their own accounts with AWS, Google Cloud Platform, Azure, etc. This provides companies the benefit of ensuring that media files never leave their VPC, while having all the features and scalability of a cloud-based SaaS encoding service. This is especially useful for sensitive content and highly secured workflows.

Hardware Agnostic

As explained previously, the nature of containerization makes it very easy to run on any standard hardware. In the case of video encoding, this is a game changer. Up until now, companies looking to run video encoding processes on-prem have been required to invest heavily in dedicated hardware encoders. These hardware encoders are expensive to purchase and often require a dedicated team to maintain them. On top of that, they are generally very inflexible and cannot be upgraded to cater to new technologies such as AV1. Containerized video encoding makes it possible to replace these dedicated boxes with standard servers. This not only allows companies to utilize their existing hardware infrastructure, but also moves the encoding process to a more standard tool set that the rest of the IT team can work with.

Speed, Agility and Flexibility

There are many situations where the speed of your encoder is important: live streaming, user generated content, and bringing a large VOD library to market, to name just a few. But the most significant aspect of containerization is the ability to adapt to a situation. Every business is different, and requires a slightly different encoding solution. Furthermore, every business changes, and the requirements of an encoding system will also change over time.

Containerization creates a versatile solution, where encodings can be run on-prem, in the cloud, or spread across both. They can be moved in real time between different zones (private cloud, Amazon Cloud, Google Cloud, etc.) to accommodate demand, security or any other specific requirements that might arise. The nature of the “controller – worker” relationship allows conditions to be built into the workflow to optimize the system on the fly, cutting costs and reducing errors. The possibilities of containerized encoding are endless.

About the Bitmovin Containerized Encoding Solution

When we released Bitmovin Containerized Encoding earlier this year, our goal was to enable our customers to benefit from our fast encoding speeds and flexible API, while at the same time being able to leverage their own existing infrastructure or cloud accounts. Because it is a managed solution that works through our Bitmovin API, it gives you the flexibility to run your base workloads on your own infrastructure, while still being able to offload burst workloads to the cloud. This also adds redundancy to the encoding pipeline, as we use a multi-cloud approach to encoding to be vendor agnostic and highly scalable.

This approach allows you to build robust and reliable encoding workflows that can cope with any load and avoid the pitfalls of over-provisioning or under-provisioning resources in advance, while still giving you full control over your infrastructure.

To deliver on this goal, we decided to base our solution on the open-source container orchestrator Kubernetes, which can run virtually anywhere, including on bare metal, and is natively supported by many major cloud vendors like Google, Microsoft, Red Hat and IBM.

This also enabled us to streamline the setup process so our customers can get up and running very quickly without requiring lengthy systems integrations (besides a running Kubernetes cluster). Kubernetes enables us to manage all the compute resources required to run our high performance encoding engine on any hardware or cloud infrastructure.

For more information on optimizing your encoding workflow with containerized encoding, contact our sales team.

This article first appeared in the Streaming Media Encoding SuperGuide.