For the past few years, AI has been one of the top buzzwords at the NAB Show. While other hot topics like “web3” seem to have peaked and faded, interest in video AI has continued to grow, and this year more practical solutions were showcased than ever before. A personal highlight for Bitmovin was winning a TV Technology Best of Show award for our AI-powered Analytics session interpreter. Keep reading to learn more about other interesting and useful applications of AI that we saw at NAB 2024.

NAB Video AI Highlights: 2024

While there was some variation in implementation and features, the majority of the AI solutions I encountered at NAB fell into one of these categories:

- Generative AI (genAI) for video creation, post-production, or summaries and descriptions

- Automatic subtitling and captioning with multi-language translations

- Object or event detection and indexing

- Video quality enhancement

This summary is definitely not exhaustive, but highlights some of the things that stood out to me on the show floor and in the conference sessions. Please let us know in the comments if you saw anything else noteworthy.

Booths and Exhibits

Adobe

Adobe has been showing AI-powered editing and post-production tools as part of their creative suite for a couple of years now, and they seem to be continuously improving. They teased a new Firefly video model, coming to Premiere Pro later this year, that will enable a few new Photoshop-like tools for video. Generative Extend will allow you to extend clips with AI-generated frames for perfectly timed edits, and the new Firefly model will also enable object removal, addition, and replacement. They’ve also implemented content credentials in the platform that will signal when generative AI was used in the creation process and which models were used, as they prepare to support third-party genAI models like OpenAI’s Sora.

Amazon Web Services (AWS)

AWS had one of the busiest booths in the West Hall and was showcasing several AI-powered solutions, including genAI for creating personalized ads and Intel’s Video Super Resolution upscaling. They also had the most eye-catching and fun application of AI in the South Hall: a genAI golf simulator where you could design and play your own course.

axle.ai

Axle.ai was sharing their face, object, and logo recognition technology that can index recognized objects and search for matching objects in other videos or clips. Their software also has automatic voice transcription and translation capabilities. It can run either on-premises or in the cloud and integrates with Adobe Premiere, Final Cut Pro and other editing suites. While other companies offer similar capabilities, they stood out as being particularly focused on these use cases.

BLUEDOT

BLUEDOT was showcasing a few different solutions for improving QoE in the encoding and processing stage. Their DeepField-SR video super resolution product uses a proprietary deep neural network, running on FPGAs, to upscale video to resolutions up to 4K. They were also showing AI-driven video encoding optimized for perceptual quality.

Twelve Labs

Twelve Labs was featuring their multimodal AI for Media & Entertainment workflows, aiming to bring human-like understanding to video content. They use both video and audio information to inform object and event detection and indexing. This enables you to easily find moments in a video, like when a certain player scores or when a product is mentioned. They also power generative text descriptions of videos and clips. Their solution seemed more flexible than others I saw and can be integrated into media asset management systems, editing software or OTT streaming workflows.

Conference Sessions and Presentations

Beyond the Hype: A Critical Look at AI in Video Streaming

In this session, as the title suggests, Jan Ozer took a close look at the current state of AI applications for video streaming workflows. He conducted several interviews with executives and product leaders ahead of NAB and shared his notes and links to the full interviews. He also called out a few times that many of the companies featured, including Bitmovin, have been researching and working on AI-powered video solutions for several years now, even before the current wave of hype. He shared Bitmovin’s new Analytics session interpreter and our Super Resolution capabilities, which you can hear more about in his interview with our VP of Product, Reinhard Grandl.

Some other things that stood out for me included Interra Systems’ BATON Captions, which uses natural language processing to break caption text in a more natural, human-readable way. It’s a small, subtle feature that I haven’t heard anyone else focus on, but it can make a big difference in accessibility and the viewer experience. DeepRender also caught my attention with their claim that their AI-based video codec will deliver 45% better compression than VVC by the end of 2024. That’s a really bold claim and I’ll be watching to see if they live up to the hype. Video of the session is available here, thanks to Dan Rayburn and the Streaming Summit.
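To make the caption line-breaking idea a bit more concrete, here is a minimal Python sketch. It is not Interra's method, which wasn't detailed in the session and presumably relies on real language models. This is just a simple hand-written heuristic, and the function name `break_caption`, the word list, the scoring weights, and the 32-character limit are all assumptions chosen for illustration. It splits a caption into two lines, preferring a break after punctuation and avoiding lines that end on a dangling article or preposition.

```python
# Words that read poorly at the end of a caption line (illustrative, not exhaustive).
WEAK_LINE_ENDINGS = {"a", "an", "the", "of", "to", "in", "on",
                     "and", "or", "but", "with", "for"}
PUNCTUATION = ",.;:?!"


def break_caption(text: str, max_chars: int = 32) -> list[str]:
    """Split a caption into at most two lines at the most natural break point."""
    words = text.split()
    text = " ".join(words)  # normalize whitespace
    if len(text) <= max_chars or len(words) < 2:
        return [text]

    best_score, best_split = None, None
    for i in range(1, len(words)):
        first, second = " ".join(words[:i]), " ".join(words[i:])
        if len(first) > max_chars or len(second) > max_chars:
            continue  # this split would overflow a line
        score = abs(len(first) - len(second))  # prefer balanced lines
        last_word = words[i - 1]
        if last_word[-1] in PUNCTUATION:
            score -= 10  # reward breaking right after punctuation
        if last_word.lower().strip(PUNCTUATION) in WEAK_LINE_ENDINGS:
            score += 10  # penalize ending a line on "the", "of", "and", ...
        if best_score is None or score < best_score:
            best_score, best_split = score, (first, second)

    # If no two-line split fits, fall back to returning the text unchanged.
    return list(best_split) if best_split else [text]


for line in break_caption("When the player scores, the crowd goes wild"):
    print(line)
```

A naive character-count wrap would happily end the first line on "the"; even this crude scoring keeps the clause "When the player scores," together, which hints at why a language-aware approach reads better on screen.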

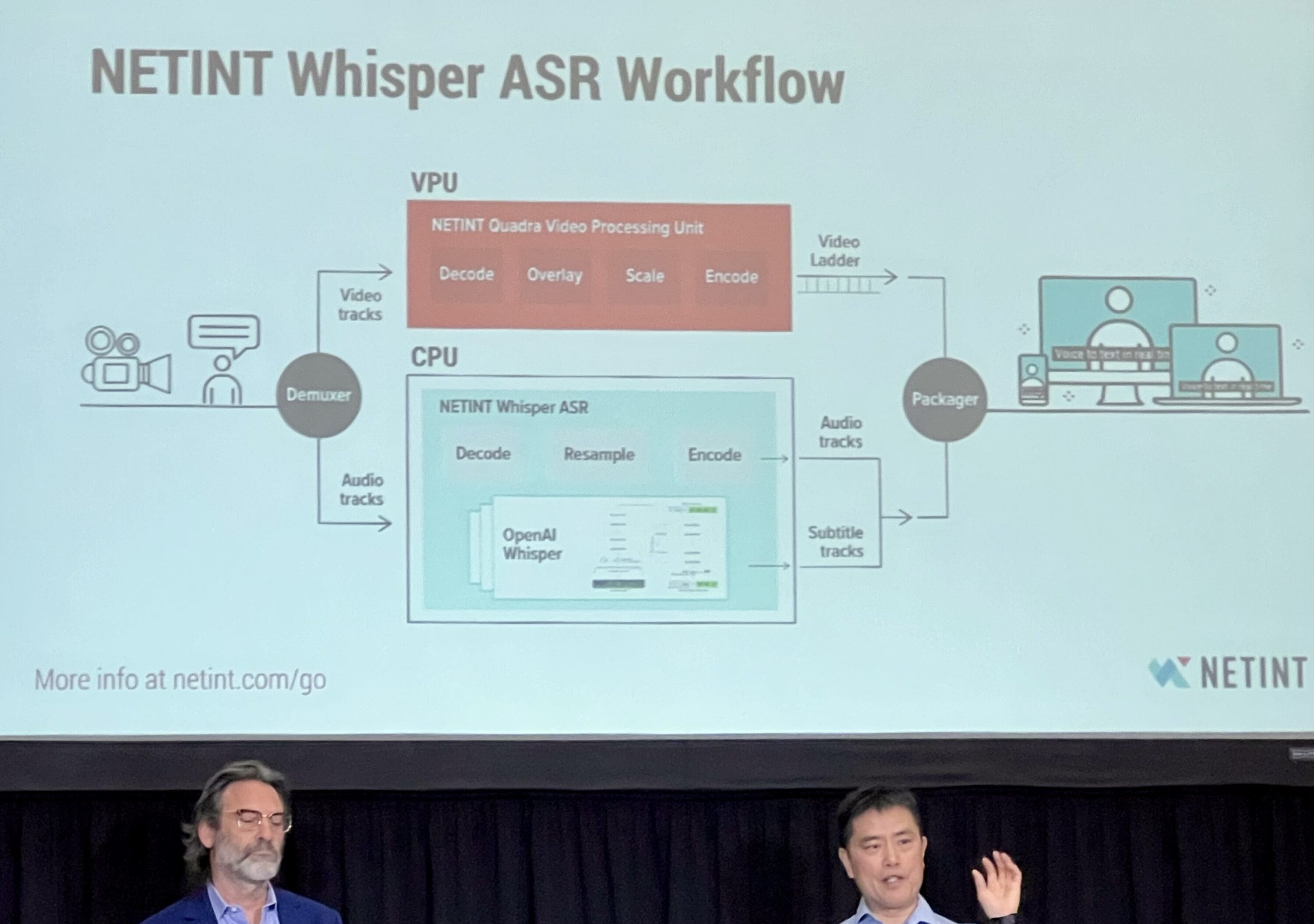

Running OpenAI’s Whisper Automatic Speech Recognition on a Live Video Transcoding Server

This was a joint presentation led by NETINT’s COO Alex Liu and Ampere’s Chief Evangelist Sean Varley. They presented a practical demo of real-time live transcoding and subtitling using NETINT’s T1U Video Processing Unit (VPU) together with Ampere’s Altra Max CPU running OpenAI Whisper. The NETINT VPU is capable of creating dozens of simultaneous adaptive bitrate outputs with H.264, H.265 and AV1 codecs. The Ampere processor was positioned as a more environmentally friendly option for AI inference workflows, consuming less power than similarly capable GPUs. While there were some hiccups with the in-room A/V system, the live captioning demo itself worked very well and was impressive. Video of the session is available here, again thanks to Dan Rayburn and the Streaming Summit.
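For readers curious how speech recognition output turns into subtitles, here is a small Python sketch. It does not reproduce the NETINT and Ampere demo pipeline, which wasn't shared in that level of detail. It only assumes transcript segments shaped like the ones the open-source Whisper package returns from `transcribe()` (dictionaries with `start`, `end`, and `text` keys) and formats them as WebVTT cues. The helper names `format_timestamp` and `segments_to_webvtt` and the sample segments are made up for illustration.

```python
def format_timestamp(seconds: float) -> str:
    """Convert seconds to a WebVTT timestamp (hh:mm:ss.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def segments_to_webvtt(segments: list[dict]) -> str:
    """Build a WebVTT document from Whisper-style transcript segments."""
    lines = ["WEBVTT", ""]  # required header followed by a blank line
    for seg in segments:
        text = seg["text"].strip()
        if not text:
            continue  # skip empty segments
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        lines.append(f"{start} --> {end}")
        lines.append(text)
        lines.append("")  # a blank line ends each cue
    return "\n".join(lines)


# Hand-written sample segments standing in for real speech recognition output.
sample_segments = [
    {"start": 0.0, "end": 2.5, "text": " Welcome to the show."},
    {"start": 2.5, "end": 5.0, "text": " Let's get started."},
]
print(segments_to_webvtt(sample_segments))
```

In a live workflow the segments would arrive continuously and the cues would be packaged alongside the video renditions, but the basic mapping from timed text to subtitle cues looks much like this.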

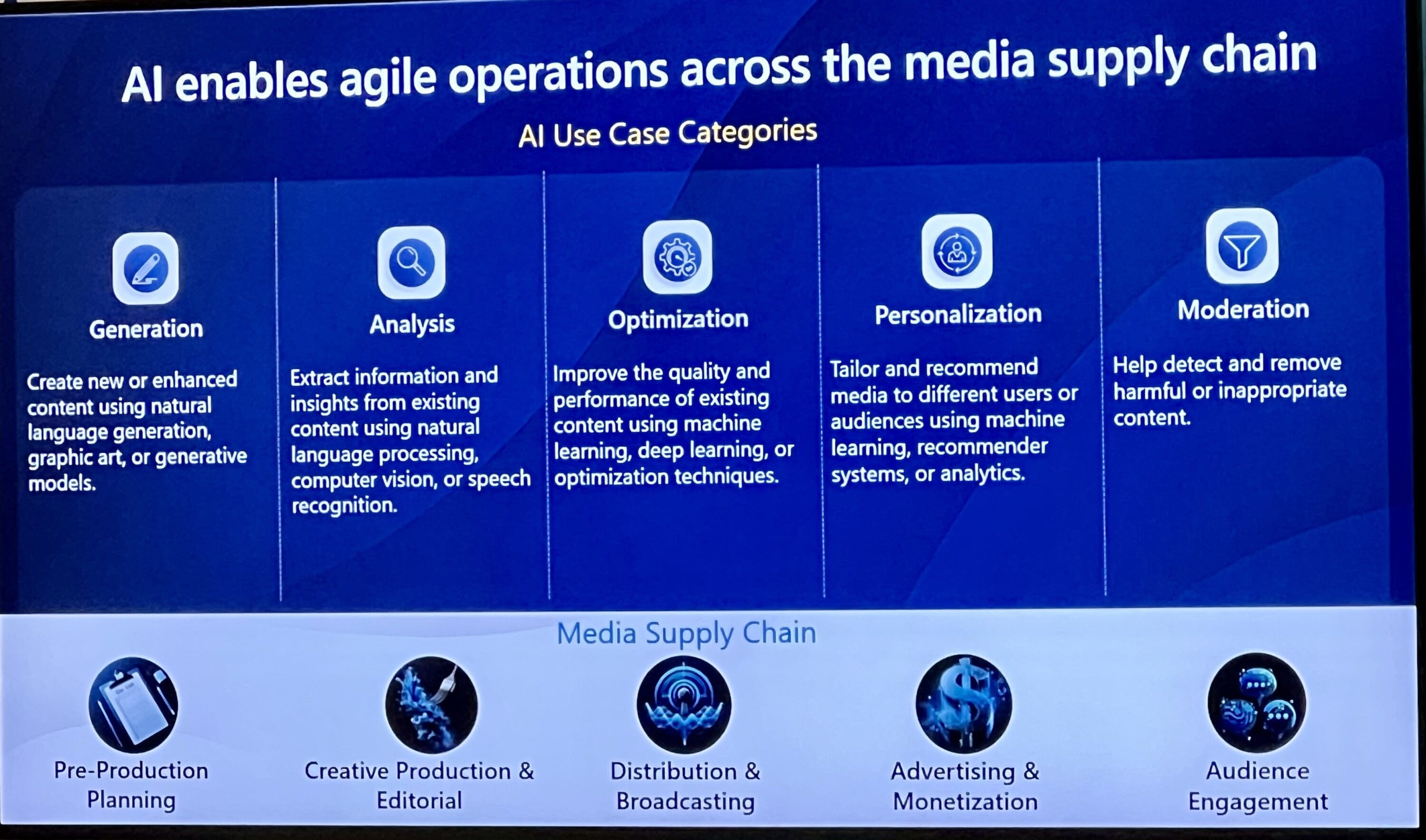

Leveraging Azure AI for Media Production and Content Monetization Workflows

Microsoft’s Andy Beach and MediaKind’s Amit Tank led this discussion and showcase of using genAI in media and entertainment workflows. They discussed how AI can help with each part of the production and delivery workflow to boost monetization, including brand detection, contextual ad placements, metadata automation, translations, captioning and personalization. One area they covered that I hadn’t heard anyone else talk about was using AI for content localization: not just language translation via captions and dubbing, but compliance with local and regional norms and, in some cases, regulations. For example, some regions and countries may prefer or even require the removal or censorship of things like alcohol and drug use, guns, or excessive violence, so AI can help automate preparing different versions of content for a global audience. They also shared their own personal “most-used” AI applications, which included Microsoft’s Copilot and related AI add-ons to Teams and other Microsoft products.

Did you see an interesting or innovative use of AI at NAB that wasn’t mentioned here? Please let us know in the comments!