HDR support is critical to video workflows at nearly every stage, from encoding to playback. When an organization makes the inevitable switch from SDR to HDR, it quickly becomes clear that the viewer’s quality of experience (QoE) improves significantly. As HDR support gradually becomes ubiquitous across the streaming space, OTT services need to offer HDR content to remain competitive, especially as consumer technology reaches resolution saturation and shifts its focus to pixel depth instead.

The reality, though, is that HDR has only been commercially available since 2014, when Dolby launched its Dolby Vision format. Even then, most content wasn’t produced with end-to-end HDR distribution in mind until fairly recently, so the majority of video out there is still stuck with the limitations of SDR.

SDR vs HDR – to Convert or not to Convert, that is the question

So what do you do with all of your existing SDR content? Can it be converted to HDR? Yes, in a few ways, though results will vary depending on the chosen approach. The old adage “quality in, quality out” (or the less optimistic “garbage in, garbage out”) applies: even with the best solutions, the quality of the source material will affect the quality of the final product. Existing compression artifacts or camera noise can be magnified and become more noticeable when upconverted, requiring extra “clean-up” steps in pre- or post-processing. So should SDR content be converted to HDR whenever it can be? Even if an SDR source file has been successfully converted to meet HDR specifications, it might still look exactly the same as the original, depending on the technique applied. Let’s take a closer look at what needs to be done and the options available for creating noticeable, worthwhile upconversions.
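To put a rough number on that magnification, here is a minimal Python sketch. It assumes full-range 8-bit SDR, a pure 2.4 power-law display gamma, a 100-nit SDR peak and a naive linear stretch to a 1000-nit peak. None of these values come from a specific product, and real upconversion does not simply stretch luminance; the sketch only shows that a one-code-value error near white grows in absolute terms once the signal is scaled up.

```python
# Illustration only: how a naive linear stretch magnifies small SDR errors.
# Assumptions: full-range 8-bit codes, a pure 2.4 power-law display gamma,
# a 100-nit SDR peak and a plain linear stretch to a 1000-nit HDR peak.
# Real up-mapping does not stretch linearly; this just shows the scaling effect.

SDR_PEAK_NITS = 100.0
HDR_PEAK_NITS = 1000.0
GAMMA = 2.4


def sdr_code_to_nits(code: int) -> float:
    """Decode a full-range 8-bit SDR code value to display luminance in nits."""
    return SDR_PEAK_NITS * (code / 255.0) ** GAMMA


def naive_stretch(nits: float) -> float:
    """Scale SDR luminance so that the SDR peak lands on the HDR peak."""
    return nits * (HDR_PEAK_NITS / SDR_PEAK_NITS)


# A one-code-value error near white, e.g. noise or a banding step in the source.
step_sdr = sdr_code_to_nits(251) - sdr_code_to_nits(250)
step_hdr = naive_stretch(sdr_code_to_nits(251)) - naive_stretch(sdr_code_to_nits(250))

print(f"one-code step near white in SDR:        {step_sdr:.2f} nits")
print(f"same step after stretching to HDR peak: {step_hdr:.2f} nits")
```

Under these assumptions the step near white is a little under 1 nit in SDR and ten times that after the stretch, which is why noise and banding that were barely visible in SDR can become distracting after upconversion.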

How does SDR become HDR?

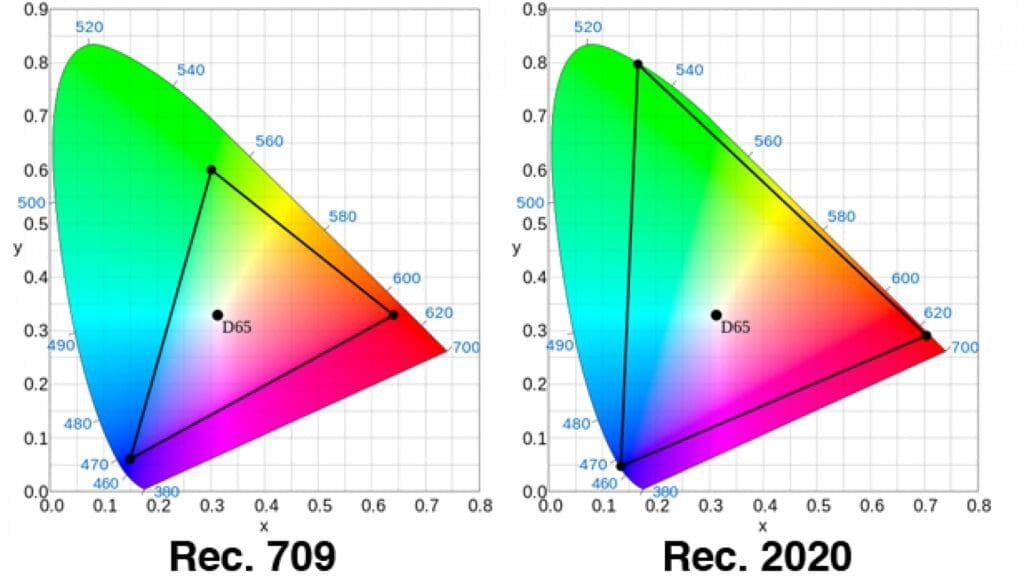

Two things need to happen to transform SDR video into the HDR domain. First, the color gamut has to be widened to the desired color space, usually from the standard Rec. 709 to the wider Rec. 2020, which covers a range of colors much closer to what the human eye can detect.

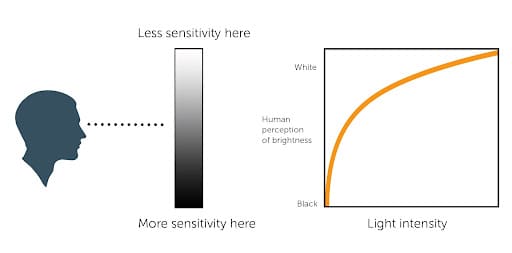
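As a minimal sketch of the gamut step, the Python below re-expresses linear Rec. 709 RGB values in Rec. 2020 primaries using the 3x3 matrix published in ITU-R BT.2087 (rounded to four decimals). It assumes the input has already been converted to linear light; applying the matrix to gamma-encoded code values would be incorrect.

```python
# Sketch: re-expressing linear Rec. 709 RGB in Rec. 2020 primaries.
# Matrix from ITU-R BT.2087 (linear light, rounded to four decimals).
# Input must be linear RGB, i.e. with the SDR gamma already removed.

from typing import Tuple

RGB = Tuple[float, float, float]

BT709_TO_BT2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)


def rec709_to_rec2020(rgb: RGB) -> RGB:
    """Convert one linear Rec. 709 RGB triple to linear Rec. 2020 RGB."""
    r, g, b = rgb
    out = [row[0] * r + row[1] * g + row[2] * b for row in BT709_TO_BT2020]
    return (out[0], out[1], out[2])


white = rec709_to_rec2020((1.0, 1.0, 1.0))  # each row sums to 1, so white stays white
red = rec709_to_rec2020((1.0, 0.0, 0.0))    # 709 red sits inside the 2020 gamut
print("white:", white)
print("red:  ", red)
```

This conversion only changes how the same colors are described: a Rec. 709 red stays the same red, it simply no longer sits at the edge of the container’s gamut. Actually using the wider gamut is the job of up-mapping.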

In addition to better matching the color detection potential of the human visual system, HDR video also aims to more closely mimic and take advantage of our ability to detect brightness and contrast. The human eye is more sensitive to differences between darker tones than between lighter ones, so perceived brightness does not increase linearly with actual increases in light.

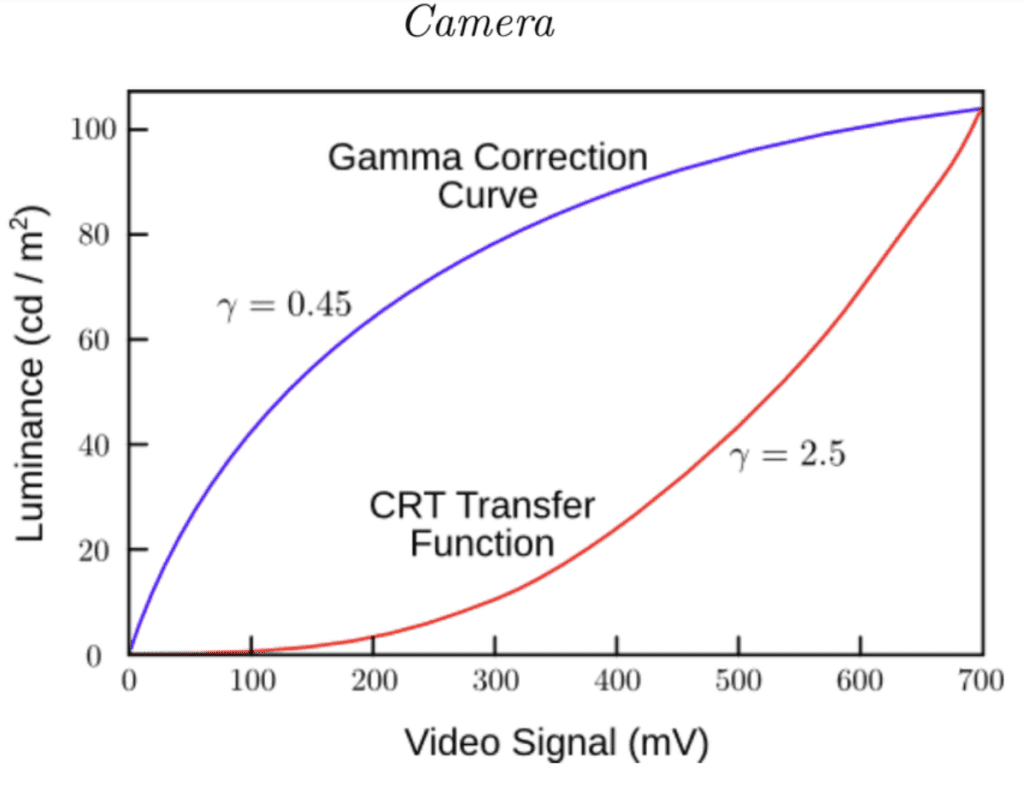
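One standard way to quantify this non-linearity is the CIE 1976 lightness formula, L*. The Python sketch below is only a colorimetric approximation of perceived brightness, used here for illustration; it is not the model that any particular HDR format is built on.

```python
# Sketch: CIE 1976 lightness L* as an approximation of perceived brightness.
# Input is relative luminance Y (0 = black, 1 = reference white).


def cie_lightness(y: float) -> float:
    """Relative luminance Y in [0, 1] -> CIE L* in [0, 100]."""
    eps = (6 / 29) ** 3  # ~0.008856; below this L* is linear in Y
    if y > eps:
        # Cube-root segment: equal steps in L* need ever larger steps in Y.
        return 116.0 * y ** (1.0 / 3.0) - 16.0
    return (29 / 3) ** 3 * y  # ~903.3 * Y near black


for y in (0.01, 0.18, 0.5, 1.0):
    print(f"Y = {y:4.2f} -> L* = {cie_lightness(y):5.1f}")
```

An 18% gray, which reflects less than a fifth of the light of white, comes out at roughly L* = 50, so it looks about halfway between black and white.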

If that disparity is not taken into account, bits (and therefore bandwidth) will be wasted on bright highlights where we are unable to notice any difference, while the darkest regions will be underprovisioned, resulting in a loss of detail and visual quality. The solution is to apply what’s known as a gamma function, or gamma correction. This begins when an image or video is captured: the camera applies gamma encoding to prioritize and preserve the details and light levels humans can appreciate. Gamma encoding was originally developed to compensate for the non-linear light output of CRT monitors, but it is still in use today, as displays built on different underlying technologies apply their own gamma decoding (or gamma expansion) functions to reproduce the captured source or the artist’s intent as accurately as possible.
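A quick way to see why gamma encoding helps is to count how many 8-bit code values end up describing the darkest tenth of the luminance range. The Python sketch below assumes a pure 2.4 power law for simplicity; real camera curves such as the BT.709 OETF also include a short linear segment near black.

```python
# Illustration: how many 8-bit code values describe the darkest 10% of the
# luminance range under linear encoding vs. a simple power-law gamma.
# Assumption: a pure 2.4 power law; real camera OETFs such as BT.709 also
# include a short linear segment near black.

from typing import Callable

MAX_CODE = 255
GAMMA = 2.4


def linear_decode(v: float) -> float:
    """Signal value in [0, 1] -> relative luminance, no gamma."""
    return v


def gamma_decode(v: float) -> float:
    """Signal value in [0, 1] -> relative luminance via a 2.4 power law."""
    return v ** GAMMA


def codes_at_or_below(threshold: float, decode: Callable[[float], float]) -> int:
    """Count the 8-bit codes whose decoded luminance is <= threshold."""
    return sum(1 for code in range(MAX_CODE + 1) if decode(code / MAX_CODE) <= threshold)


dark_linear = codes_at_or_below(0.10, linear_decode)
dark_gamma = codes_at_or_below(0.10, gamma_decode)
print(f"codes for the darkest 10% of luminance: linear={dark_linear}, gamma={dark_gamma}")
```

Linear encoding leaves only 26 of the 256 codes for that dark range, while the power law assigns 98 of them, shifting precision toward the tones where the eye notices differences and away from highlights where it does not.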

The chart below shows a typical camera gamma correction curve and the corresponding transfer function used by a CRT monitor to compensate for it, so that the displayed scene matches what the human visual system would have perceived.

In 2011, the ITU adopted BT.1886 as the reference gamma function (EOTF) for flat panel displays used in HDTV studio production. The standard models the response of CRT monitors more closely than previous functions, enabling a more consistent viewing experience, and it also takes the display’s black level into account, allowing clearer distinctions in the darkest areas of SDR video.

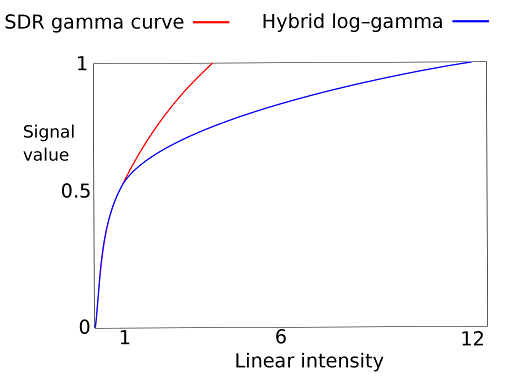
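For reference, the BT.1886 EOTF can be written as L = a · max(V + b, 0)^2.4, where a and b are derived from the display’s white luminance Lw and black luminance Lb. The Python sketch below implements that formula; the 100-nit and 0.1-nit defaults are example values chosen for illustration, not values the standard mandates.

```python
# Sketch of the ITU-R BT.1886 reference EOTF (gamma 2.4 with a black-level term).
# lw / lb are the display's white and black luminance in nits; the defaults
# are example values for illustration only.

GAMMA = 2.4


def bt1886_eotf(v: float, lw: float = 100.0, lb: float = 0.1) -> float:
    """Normalized video signal V in [0, 1] -> screen luminance in nits."""
    lw_root = lw ** (1.0 / GAMMA)
    lb_root = lb ** (1.0 / GAMMA)
    a = (lw_root - lb_root) ** GAMMA   # user gain (legacy "contrast")
    b = lb_root / (lw_root - lb_root)  # black-level lift (legacy "brightness")
    return a * max(v + b, 0.0) ** GAMMA


# The same dark signal on two displays with different black levels.
for black in (0.1, 0.01):
    print(f"Lb={black:5.2f} nits: V=0.0 -> {bt1886_eotf(0.0, lb=black):.3f} nits, "
          f"V=0.1 -> {bt1886_eotf(0.1, lb=black):.3f} nits")
```

Because b depends on Lb, a display with a deeper black level renders the same dark signal values closer to black, which is how BT.1886 accounts for the black level capabilities of the device.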

To complete the conversion from SDR to HDR, the gamma function also needs to be translated, usually from BT.1886 to either Perceptual Quantizer (PQ) or Hybrid Log-Gamma (HLG), depending on the desired final format. PQ was developed by Dolby and is the gamma function used by the Dolby Vision, HDR10 and HDR10+ formats. The HLG HDR format shares its name with the gamma function it employs and was jointly developed by the BBC and NHK for broadcast applications. As the name suggests, HLG takes a hybrid approach, combining a conventional SDR-style gamma curve for the lower part of the signal range with a logarithmic curve for the HDR highlights, which allows HLG content to be viewed as intended on both SDR- and HDR-capable monitors.
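Both HDR curves are defined by closed-form equations. The Python sketch below implements the PQ inverse EOTF from SMPTE ST 2084 (absolute luminance in nits to signal value) and the HLG OETF from ITU-R BT.2100 (relative scene light to signal value), using the constants published in those standards. It is meant to illustrate the shape of each curve, not to serve as a production converter.

```python
# Sketch: PQ (SMPTE ST 2084) and HLG (ITU-R BT.2100) encoding curves.
import math

# PQ constants from ST 2084
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_inverse_eotf(nits: float) -> float:
    """Absolute luminance in nits (0..10000) -> PQ signal value in [0, 1]."""
    y_m1 = (max(nits, 0.0) / 10000.0) ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


# HLG constants from BT.2100
A = 0.17883277
B = 1.0 - 4.0 * A
C = 0.5 - A * math.log(4.0 * A)


def hlg_oetf(e: float) -> float:
    """Normalized scene linear light E in [0, 1] -> HLG signal value in [0, 1]."""
    e = max(e, 0.0)
    if e <= 1.0 / 12.0:
        return math.sqrt(3.0 * e)           # square-root segment, SDR-like gamma
    return A * math.log(12.0 * e - B) + C   # logarithmic segment for highlights


for nits in (100, 1000, 10000):
    print(f"PQ({nits:>5} nits) = {pq_inverse_eotf(nits):.4f}")
for e in (1 / 12, 0.5, 1.0):
    print(f"HLG(E={e:.3f}) = {hlg_oetf(e):.4f}")
```

The difference in inputs is the key design distinction: PQ encodes absolute luminance up to 10,000 nits, whereas HLG encodes relative scene light and leaves the final luminance to the display. The square-root branch is what keeps the lower part of HLG close to a conventional SDR gamma curve.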

Direct Mapping vs Up-Mapping

The simplest approach to converting SDR to HDR involves directly mapping the equivalent color and brightness values, essentially encapsulating the SDR signal in an HDR container along with the associated metadata. This is the most computationally efficient and cost-effective way to complete the technical conversion to HDR, but while the video is now identified as HDR, it will look the same as the SDR source, which can lead to an inconsistent and disappointing viewer experience.
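A minimal sketch of direct mapping in Python, assuming full-range 8-bit SDR input, a pure 2.4 display gamma, SDR white placed at 100 nits, full-range 10-bit PQ output and no gamut conversion (in a real pipeline the Rec. 709 to Rec. 2020 matrix would also be applied in linear light). Production systems typically use narrow-range code values, and some place SDR white at the 203-nit HDR reference white suggested in ITU-R BT.2408; either choice would only change constants here.

```python
# Direct-mapping sketch: SDR code value -> same luminance -> PQ code value.
# Assumptions: full-range 8-bit SDR, pure 2.4 gamma, SDR white at 100 nits,
# full-range 10-bit PQ output, no gamut conversion.

M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

SDR_WHITE_NITS = 100.0  # assumption; 203 is another common choice


def pq_inverse_eotf(nits: float) -> float:
    """Absolute luminance in nits -> PQ signal value in [0, 1] (SMPTE ST 2084)."""
    y_m1 = (max(nits, 0.0) / 10000.0) ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def direct_map(code8: int) -> int:
    """8-bit SDR code -> 10-bit PQ code that reproduces the same luminance."""
    nits = SDR_WHITE_NITS * (code8 / 255.0) ** 2.4  # undo SDR display gamma
    return round(pq_inverse_eotf(nits) * 1023)      # re-encode with PQ


for code in (0, 64, 128, 255):
    print(f"SDR {code:3d} -> PQ {direct_map(code):4d}")
```

Every SDR code value lands on a PQ code that reproduces the same luminance, so the whole SDR range ends up in roughly the lower half of the PQ code range and the picture looks just like the SDR source.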

The preferred method from a quality perspective is known as up-mapping, or inverse tone mapping, which creates an “HDR look” that allows SDR clips or shows to blend into an HDR production or platform. This is achieved through tone-mapping filters or lookup tables (LUTs) that have been extensively tested and calibrated to accurately recreate and enhance SDR content in the HDR domain. Up-mapping is more computationally complex, and thus more expensive, than direct mapping, but the visual difference is significant, and it is what a true SDR-to-HDR workflow requires.
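To contrast with direct mapping, here is a deliberately simple, luminance-only up-mapping sketch in Python that builds a 256-entry 1D LUT from 8-bit SDR codes to 10-bit PQ codes. The expansion curve and all of its parameters (BASE_NITS, PEAK_NITS, KNEE, EXPONENT) are hypothetical choices for illustration; the tested and calibrated LUTs and filters used in practice are typically 3D, operate on color, and may adapt to the content.

```python
# Toy up-mapping sketch: a 1D LUT from 8-bit SDR codes to 10-bit PQ codes
# that expands highlights instead of copying luminance 1:1.
# All parameters below are hypothetical, chosen only for illustration.

from typing import List

M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

BASE_NITS = 203.0    # hypothetical: nits SDR white would get without expansion
PEAK_NITS = 1000.0   # hypothetical: target HDR peak
KNEE = 0.8           # hypothetical: relative SDR luminance where expansion starts
EXPONENT = 2.0       # hypothetical: shape of the highlight boost


def pq_inverse_eotf(nits: float) -> float:
    """Absolute luminance in nits -> PQ signal value in [0, 1] (SMPTE ST 2084)."""
    y_m1 = (max(nits, 0.0) / 10000.0) ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def expand(y: float) -> float:
    """Relative SDR luminance in [0, 1] -> HDR luminance in nits."""
    linear = BASE_NITS * y
    if y <= KNEE:
        return linear
    t = (y - KNEE) / (1.0 - KNEE)
    # Boost starts at zero with zero slope at the knee and lifts y = 1 to PEAK_NITS.
    return linear + (PEAK_NITS - BASE_NITS) * t ** EXPONENT


def build_lut() -> List[int]:
    """One 10-bit PQ code per 8-bit SDR code."""
    lut = []
    for code in range(256):
        y = (code / 255.0) ** 2.4  # undo SDR display gamma (pure power law assumed)
        lut.append(round(pq_inverse_eotf(expand(y)) * 1023))
    return lut


lut = build_lut()
print("SDR 128 ->", lut[128], " SDR 255 ->", lut[255])
```

Below the knee, the curve keeps SDR tones on a linear scale; above it, the smooth boost pushes SDR white up to the 1000-nit peak, so the top SDR code lands near the PQ code for 1000 nits rather than the code for SDR reference white.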

Improving Quality AND Experience

Streaming services with a mixed catalog of old and new, SDR and HDR, should strongly consider up-mapping their SDR content to match the look and format(s) of their HDR content. It’s the best way to ensure the highest quality, most consistent and enjoyable viewing experiences across their entire library. In a crowded market, it will elevate and differentiate their service.

When switching between SDR and HDR content, there may be flickering or flashing as displays auto-detect the change and recalibrate, so at the very least, providers with blended SDR/HDR content should direct-map their SDR content to HDR to keep the technical specifications consistent for the display. This is especially important for services that combine HDR content with ad-supported subscription tiers: they should confirm that their ad inventory is conditioned to match the HDR specs of their programming, so they aren’t amplifying the disruption the ads already create.
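Checking that ad creatives carry the same color signaling as the programming is easy to automate. The Python sketch below compares three per-stream fields using the names ffprobe reports (color_primaries, color_transfer, color_space), which can be read with, for example, `ffprobe -v error -select_streams v:0 -show_entries stream=color_primaries,color_transfer,color_space -of json <file>`. The asset names and metadata values in the example are hypothetical.

```python
# Sketch: flag ad creatives whose color signaling differs from the programming,
# so they can be conditioned before insertion. Field names mirror ffprobe's
# per-stream color fields; the assets below are hypothetical examples.

from typing import Dict, List

SIGNALING_KEYS = ("color_primaries", "color_transfer", "color_space")


def mismatches(program: Dict[str, str], asset: Dict[str, str]) -> List[str]:
    """Return the signaling fields where `asset` differs from `program`."""
    return [key for key in SIGNALING_KEYS if asset.get(key) != program.get(key)]


program = {"color_primaries": "bt2020", "color_transfer": "smpte2084", "color_space": "bt2020nc"}
ads = {
    "ad_hdr10": {"color_primaries": "bt2020", "color_transfer": "smpte2084", "color_space": "bt2020nc"},
    "ad_sdr": {"color_primaries": "bt709", "color_transfer": "bt709", "color_space": "bt709"},
}

for name, meta in ads.items():
    diff = mismatches(program, meta)
    print(name, "OK" if not diff else "needs conditioning: " + ", ".join(diff))
```

Matching signaling only ensures the display will not switch modes mid-stream; whether the ad also matches the look of the programming depends on whether it was direct-mapped or up-mapped.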

The Future is in HDR

We are living through a transitional period of mixed content, and we will no doubt eventually reach a point where everything is produced in HDR, playback support is universal, and all of the legacy SDR videos deemed worthy have been upconverted. Streaming industry analyst Dan Rayburn sees HDR as a key advantage and predicts that streaming services will see the fastest rates of HDR adoption over the next two years. It’s really not a matter of if, but when, so any content with long-term appeal should be upconverted to HDR as soon as possible to get the most value out of the conversion over the content’s lifetime. Keep an eye out for our upcoming post about how to streamline your HDR encoding workflows with Bitmovin, including examples of upconverting SDR to HDR with the Bitmovin encoding API.

In the meantime, if you want to find out more about SDR vs HDR and the best upconversion approach for your service, get in touch today or check out one of these other great resources:

[Blog Post] HDR vs SDR: Why HDR Should Be Part of Your Video Workflow

[On-Demand Webinar] Deploying HDR for Streaming Services: Challenges and Opportunities

[On-Demand Webinar] How to Enable and Optimize HDR Encoding