What is the problem with async code?

As every developer knows, writing concurrent code is hard. Ask any project manager and they will likely agree: the bugs that are hardest to track down and fix in their projects usually turn out to be race conditions of some kind.

This is not restricted to one programming language either. More or less every language that is older than about 10 years and based on a procedural or OOP approach seems to have problems in this area. In JavaScript, these problems show up most clearly when writing async code.

For instance, let’s consider the Bitmovin MSE (Media Source Extensions) demo. In this very simplified example, all the steps are scheduled in sequential order using callbacks, to avoid problems. Opening the MSE triggers the download of the first segment, which triggers appending that segment to the source buffer, which triggers the download of the second segment, and so on.

To get things ready a bit faster, it would make sense to start downloading the first segment at the same time as opening the source buffers: playback depends on both, but they are independent of each other. We could use Promise.all to start both at once, but then we immediately run into a problem: if opening the source buffers fails, the download continues regardless. So we have exchanged one problem, waiting unnecessarily to start the first download, for another: downloading data unnecessarily and throwing it away or, worse, keeping it somewhere and causing a memory leak.
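The problem can be reproduced without any media APIs. The sketch below is only an illustration: openSourceBuffers and downloadFirstSegment are hypothetical stand-ins that simulate the two steps with timers, and the timings are arbitrary assumptions.

```typescript
// Hypothetical stand-ins for the two real steps; names and timings are illustrative only.
const log: string[] = [];

const sleep = (ms: number) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Simulates opening the source buffers and failing quickly.
async function openSourceBuffers(): Promise<void> {
  await sleep(10);
  throw new Error("could not open source buffers");
}

// Simulates downloading the first segment. It knows nothing about its sibling.
async function downloadFirstSegment(): Promise<Uint8Array> {
  await sleep(50);
  log.push("download finished");
  return new Uint8Array(1024);
}

async function startPlayback(): Promise<void> {
  try {
    await Promise.all([openSourceBuffers(), downloadFirstSegment()]);
  } catch (error) {
    // Promise.all rejects as soon as one input rejects,
    // but it does not stop the other input from running.
    log.push(`setup failed: ${(error as Error).message}`);
  }
}
```

Calling startPlayback() records the setup failure first, yet "download finished" still shows up in the log afterwards: nothing told the download to stop.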

Of course, in every specific situation, a fix can be implemented by carefully covering every error condition so that the problem cannot occur. In JavaScript specifically, this means carefully orchestrating promise chains so that nothing is left dangling (still executing when it shouldn’t be) and all stale data is cleared.

A more general solution would be better. Luckily, there is one.

What is structured concurrency?

The kind of problem we examined above is fairly common in applications that are data-heavy and highly dynamic. Web video players are both of those things, so here at Bitmovin, we have had our fair share of interesting work in this area. Therefore we always have an eye out for fresh approaches to solving this type of problem. So, when we discovered structured concurrency, we felt we had to take a look.

Structured concurrency is a very obvious idea, in hindsight. It starts from the recognition that when a programming language lets the programmer start concurrent work that is not tied to the code that started it, certain types of problems can arise.

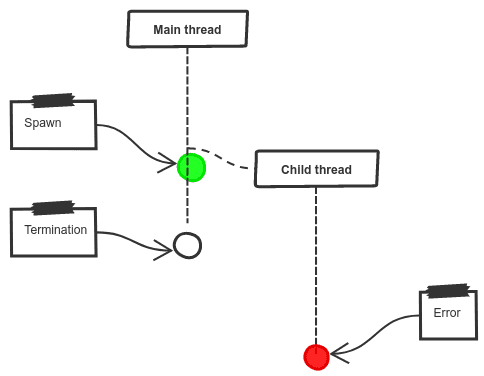

In cases where a parent starts a parallel child execution, the parent can terminate before the child does. This may mean that errors in the child are simply not handled anywhere. This can cause a variety of problems, including deadlocks and application crashes.

An example of the main thread terminating before the child thread. In this case, the language has no place to send the error message or exception.

Similarly, two parallel executions (like in our download and MSE initialization example) can result in a situation where one continues executing even when the other has already thrown an error. There may be code that is waiting for the result of both executions, in which case the result of the second execution will never be used.

For an in-depth discussion of these problems, the NJS blog on structured concurrency provides good examples as well.

How does structured concurrency work?

Structured concurrency, then, is simply a set of rules that should be followed to avoid these types of errors.

- All asynchronous code should have an explicit parent context, which should not terminate until all of its child contexts have terminated.

- All errors must propagate up until they are handled.

- Due to the first rule, termination must also propagate down, meaning that any children of an execution context must be terminated when an error occurs in that context.

What these rules describe is a tree of concurrent executions that can be reasoned about. Because the programmer knows that any results will be brought into the parent scope, and that any errors can also be handled there, a concurrent execution, just like a normal function call, can be treated like a pure function of its inputs and used as a building block for more abstract tasks.

Even more interesting is that because these rules are just conventions, it is possible to write a framework that implements them, even in the absence of specific language support. Users of such a framework will get the benefits of a more efficient programming model.
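To make the three rules concrete, here is a minimal sketch of how they could be expressed in plain TypeScript on top of the standard AbortController. ChildTask and runScope are hypothetical names chosen for this illustration, not part of any existing library, and the sketch assumes that child tasks cooperate by stopping when their signal is aborted.

```typescript
// A child receives a signal that tells it when its scope has been cancelled.
// (Hypothetical type for this sketch.)
type ChildTask = (signal: AbortSignal) => Promise<void>;

// Hypothetical helper: runs `tasks` as children of a single scope.
async function runScope(
  tasks: ChildTask[],
  parentSignal?: AbortSignal,
): Promise<void> {
  const controller = new AbortController();
  const cancel = () => controller.abort();

  // Rule 3: termination propagates down from the parent scope into this one.
  if (parentSignal?.aborted) cancel();
  parentSignal?.addEventListener("abort", cancel);

  const errors: unknown[] = []; // recorded in the order they occur

  const children = tasks.map(async (task) => {
    try {
      await task(controller.signal);
    } catch (error) {
      errors.push(error);
      cancel(); // Rule 3: a failing child terminates its siblings.
    }
  });

  // Rule 1: the scope does not end until every child has ended.
  // The wrapped children never reject, so this waits for all of them.
  await Promise.all(children);
  parentSignal?.removeEventListener("abort", cancel);

  // Rule 2: the first error propagates up to whoever started the scope.
  if (errors.length > 0) throw errors[0];
}
```

Because a child can itself call runScope with the signal it was given, scopes nest, which yields the tree of executions described above.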

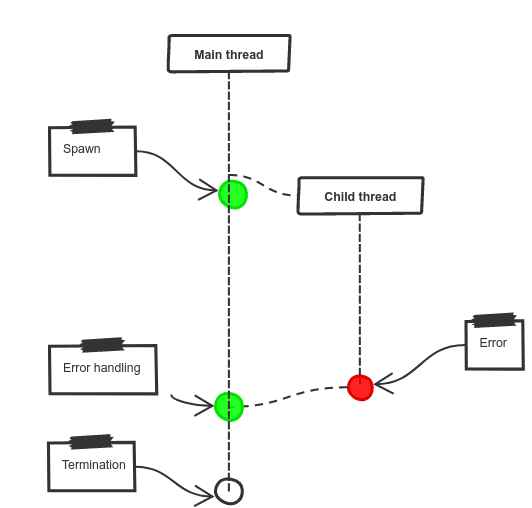

With structured concurrency, the main (or parent) thread must wait to terminate until all child threads have terminated. This means that the error can be bubbled up.

At Bitmovin, we have been working on exactly this: a structured concurrency framework for dynamic, data-heavy applications in TypeScript that will support our work on video player technology.

Using our framework, our example from above is not modified in any significant way from the developer perspective. We would still run both asynchronous processes in parallel (via an equivalent to Promise.all). However, should one of them throw an error, the other would be automatically cancelled, and all contextual data cleaned up. This means that it becomes completely trivial to simply wait for the result of both executions, then continue pushing the segment into the MSE.
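As a rough illustration of that behaviour, and explicitly not our framework's actual API, the sketch below builds a Promise.all-like helper on the standard AbortController. The names allOrCancel, delay, openSourceBuffers and downloadFirstSegment are hypothetical, and the two steps are simulated with timers.

```typescript
// A task receives a signal that tells it when it has been cancelled.
type Task<T> = (signal: AbortSignal) => Promise<T>;

// Hypothetical Promise.all-like helper for two tasks.
// The return type is inferred as a promise of a [resultA, resultB] tuple.
async function allOrCancel<A, B>(first: Task<A>, second: Task<B>) {
  const controller = new AbortController();
  const errors: unknown[] = []; // recorded in the order they occur

  const guard = <T>(task: Task<T>): Promise<T> =>
    task(controller.signal).catch((error: unknown) => {
      errors.push(error);
      controller.abort(); // cancel the sibling
      throw error;
    });

  const running = [guard(first), guard(second)] as const;
  await Promise.allSettled(running); // wait until both have finished or unwound
  if (errors.length > 0) throw errors[0]; // report the original failure
  return Promise.all(running);
}

// Timer that rejects as soon as the signal is aborted.
function delay(ms: number, signal: AbortSignal): Promise<void> {
  return new Promise<void>((resolve, reject) => {
    if (signal.aborted) {
      reject(new Error("cancelled"));
      return;
    }
    const timer = setTimeout(resolve, ms);
    signal.addEventListener("abort", () => {
      clearTimeout(timer);
      reject(new Error("cancelled"));
    }, { once: true });
  });
}

const events: string[] = [];

// Simulated step: opening the source buffers fails after 10 ms.
const openSourceBuffers: Task<void> = async (signal) => {
  await delay(10, signal);
  throw new Error("could not open source buffers");
};

// Simulated step: the download takes 50 ms unless it is cancelled first.
const downloadFirstSegment: Task<Uint8Array> = async (signal) => {
  try {
    await delay(50, signal);
    return new Uint8Array(1024);
  } catch (error) {
    events.push("download cancelled"); // clean-up of contextual data would go here
    throw error;
  }
};
```

With these stand-ins, awaiting allOrCancel(openSourceBuffers, downloadFirstSegment) rejects with the source buffer error, and by the time it does, the download has already been cancelled and had the chance to clean up.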

But it gets better. Because it is possible to reason about asynchronous executions, it becomes possible to compose them. This, in turn, makes it possible to design complex, data-heavy, concurrent applications, like video players, without fear of introducing complicated concurrency bugs along the way. We know this because we have already written a video player using our framework: not only did it have no concurrency bugs, it also performs remarkably well.

In our next blog post, we will go further into the advantages of structured concurrency and how we’ve used it in the right places to solve problems in a way that pays off over time. To showcase this, we will show results from our new Player as a proof of concept and present benchmark numbers.