The Bitmovin Player is now equipped with facial recognition software that allows the player logic to adapt to viewers’ behavior.

A Little About User Interfaces

Ever since ENIAC (Electronic Numerical Integrator and Computer), one of the world’s first general-purpose electronic computers, was unveiled in 1946, computers have been designed to respond to human input. ENIAC’s human interface was a set of large plugboards that resembled a telephone exchange. Soon after that, the keyboard became the standard input mechanism, and then, with the invention of the GUI, more intuitive devices were developed, such as the mouse, the trackball, the stylus and later the touch screen. In the last decade, the scope of human interface technology has expanded beyond hand-controlled input devices to include movement sensors, voice control and video recognition. This last area, video recognition, has been the focus of some exciting feature development in the Bitmovin Player, opening up new opportunities for video content providers to interact with their audience.

What is Video Recognition Software?

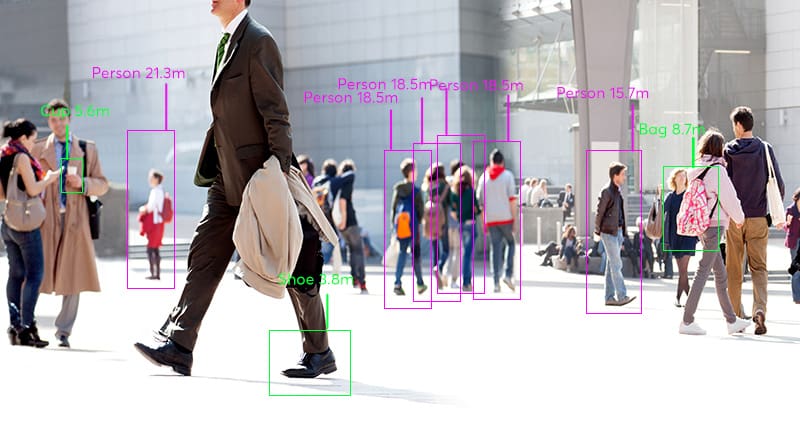

To explain it simply, video recognition software can analyse a video, identify specific objects within it and then track them as they move through the frame. The word “object” for these purposes could be anything that the software has been taught to recognize. This could include people, letters or numbers, cars, animals or any number of other visual elements within the video. Facial recognition, which builds on face detection, is a related technology whose development has been accelerated by artificial intelligence. It can be used not just for identifying people, but also for detecting specific facial expressions or facial movements. This level of recognition can be very important for the context of your video content and can create significant opportunities to increase your viewers’ Quality of Experience (QoE).

How we used this in the Bitmovin Player

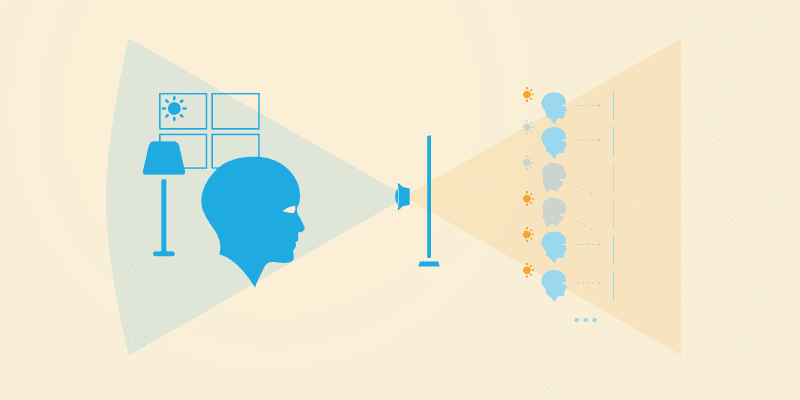

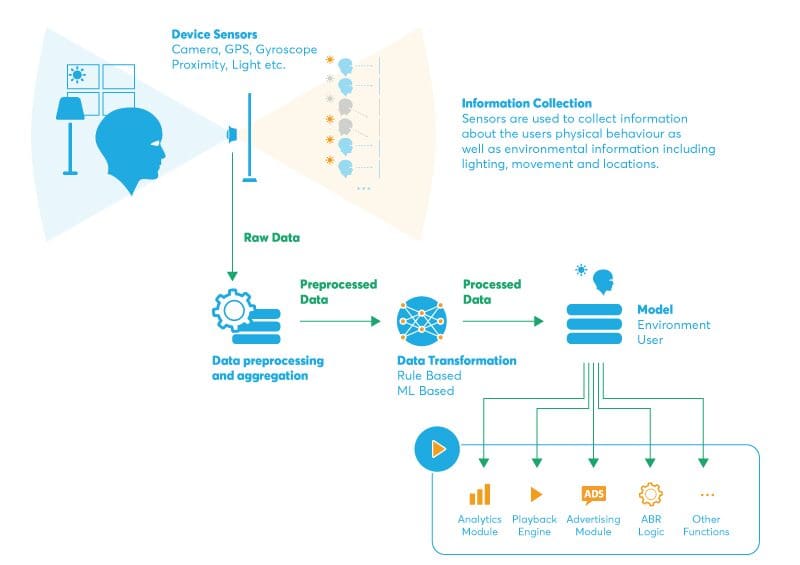

User Aware Adaptation is a feature that we have built (patent pending) by collecting data about the user and how the user is interacting with the video application. This data, which is collected from the device’s sensors, is processed and used to build a model of the user and the user’s environment that is updated in real time. With that system in place, the video player can use rule-based or machine learning mechanisms to drive decisions in a wide variety of modules and functions, including analytics, playback, advertising and adaptive logic.
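To make the idea of a continuously updated user model a little more concrete, here is a minimal sketch in Python. It is not the Bitmovin implementation: the `UserModel` class, its fields and the smoothing factor are all assumptions chosen for illustration. It simply blends each new sensor observation into a running estimate using exponential smoothing, so that a single noisy reading does not flip the player's decisions.

```python
from dataclasses import dataclass


@dataclass
class UserModel:
    """Hypothetical running estimate of the viewer's state (illustrative only)."""
    distance_m: float = 2.0   # estimated viewing distance in metres
    attention: float = 1.0    # 0.0 = not watching, 1.0 = fully attentive
    alpha: float = 0.5        # assumed smoothing factor for new observations

    def update(self, distance_m: float, looking_at_screen: bool) -> None:
        """Blend one sensor observation into the model (exponential smoothing)."""
        a = self.alpha
        observed_attention = 1.0 if looking_at_screen else 0.0
        self.distance_m = (1 - a) * self.distance_m + a * distance_m
        self.attention = (1 - a) * self.attention + a * observed_attention


# Feed in three made-up observations: the viewer walks away and looks elsewhere.
model = UserModel()
for distance, looking in [(2.0, True), (4.0, False), (4.0, False)]:
    model.update(distance, looking)
```

A rule-based or machine learning module could then read `model.distance_m` and `model.attention` instead of reacting to raw sensor samples.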

Optimizing Your Video Delivery for Each User

Video delivery optimization is one of the first practical applications that we have implemented with Bitmovin’s User Aware Video Delivery feature. This feature takes the user’s physical behaviour into account and adds that to the Bitmovin Player’s adaptation logic. This means that the player can make smart bandwidth-saving decisions when opportunities arise. For example, it can reduce the video quality (bitrate) and save bandwidth when it detects that the viewer is further away from the screen, or when the user is involved in a secondary activity and probably only listening to the audio, such as cooking while they watch a cooking show.
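The decision described above can be sketched as a cap on top of ordinary bandwidth-based selection. The bitrate ladder, the 3-metre threshold and the function name `pick_bitrate` are hypothetical values picked for illustration, not the player's actual adaptation logic or API.

```python
# Hypothetical bitrate ladder in kbit/s, lowest to highest.
LADDER_KBPS = [400, 1200, 2500, 5000, 8000]


def pick_bitrate(bandwidth_kbps: float, distance_m: float, watching: bool) -> int:
    """Return the highest ladder bitrate allowed by bandwidth and viewer state."""
    # Assumed caps: illustrative thresholds, not measured values.
    if not watching:
        cap = 400     # viewer is probably only listening to the audio
    elif distance_m > 3.0:
        cap = 2500    # far from the screen, fine detail is less visible
    else:
        cap = 8000    # close and attentive, no user-based cap
    limit = min(bandwidth_kbps, cap)
    candidates = [b for b in LADDER_KBPS if b <= limit]
    # Fall back to the lowest rendition if even that exceeds the limit.
    return candidates[-1] if candidates else LADDER_KBPS[0]


close_up = pick_bitrate(6000, 1.5, True)      # limited only by bandwidth
far_away = pick_bitrate(6000, 4.0, True)      # capped because of distance
cooking = pick_bitrate(6000, 4.0, False)      # capped because nobody is watching
```

With the same 6000 kbit/s connection, the three calls select different renditions, which is where the bandwidth saving comes from.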

Increasing Quality of Experience

Another opportunity created by this feature is to improve your QoE score. Assuming your audience is comfortable with this level of communication and they agree to, or enable, the features, there is a huge range of creative possibilities in this category. You could increase the bitrate when you see the user coming closer to the screen. You could pause the video if you see that the user has left the room or fallen asleep, and then resume when they return their attention to the screen. You could reduce the volume when you see that the user has answered a telephone call and then return to normal volume when the call ends. You could even start to analyse the emotional reactions of your viewers and make content suggestions based on whether they laughed harder at Steve Martin or Robin Williams. Another possibility would be to build gesture controls into your player. This technology would theoretically allow you to create a video player that is driven by hand or even head gestures.
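The pause-and-resume idea is a good example of why a little state is needed: pausing the instant a face is lost would be irritating if the viewer only glanced away. The sketch below assumes a hypothetical `PresenceController` that receives periodic presence samples and only pauses after an assumed timeout. The class, its method names and the 5-second default are illustrative, not part of the Bitmovin Player.

```python
from typing import Optional


class PresenceController:
    """Pause after the viewer has been absent for a while; resume on return."""

    def __init__(self, absence_timeout_s: float = 5.0) -> None:
        self.absence_timeout_s = absence_timeout_s  # assumed grace period
        self.absent_since: Optional[float] = None
        self.paused_by_us = False

    def on_sample(self, now_s: float, viewer_present: bool) -> str:
        """Return 'pause', 'play' or 'none' for one detection sample."""
        if viewer_present:
            self.absent_since = None
            if self.paused_by_us:
                # Only resume playback that this controller paused itself.
                self.paused_by_us = False
                return "play"
            return "none"
        if self.absent_since is None:
            self.absent_since = now_s
        absent_for = now_s - self.absent_since
        if not self.paused_by_us and absent_for >= self.absence_timeout_s:
            self.paused_by_us = True
            return "pause"
        return "none"


# Made-up timeline: the viewer leaves at t=1s and comes back at t=8s.
controller = PresenceController(absence_timeout_s=5.0)
samples = [(0, True), (1, False), (3, False), (6, False), (7, False), (8, True)]
actions = [controller.on_sample(t, present) for t, present in samples]
```

Tracking `paused_by_us` means the controller never resumes a video that the viewer paused manually.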

Optimizing Your Advertising Revenue

Obviously, the potential for increasing ad revenue is another drawcard for this new technology. By intelligently controlling the timing of your advertising, you can ensure that your ads are shown at times when they will have maximum impact. An obvious rule you could build into your workflow would be not to show ads if the viewer is out of the room or asleep. Other examples could be to show ads when the viewer has shown a positive facial reaction to a product placement within the video, or not to show specific advertising if the user is showing signs of distress or discomfort.
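Rules like these are easy to express as a small gating function. The following sketch assumes a hypothetical `ViewerState` record and an `ad_is_sensitive` flag supplied by your own ad workflow; neither is a real Bitmovin or ad-server API.

```python
from dataclasses import dataclass


@dataclass
class ViewerState:
    """Hypothetical snapshot of what the recognition layer reports."""
    present: bool
    asleep: bool
    distressed: bool


def should_show_ad(viewer: ViewerState, ad_is_sensitive: bool) -> bool:
    """Apply the illustrative rules: nobody watching means no ad at all."""
    if not viewer.present or viewer.asleep:
        return False
    # Hold back only the specific ads flagged as unsuitable for a distressed viewer.
    if viewer.distressed and ad_is_sensitive:
        return False
    return True


attentive = ViewerState(present=True, asleep=False, distressed=False)
sleeping = ViewerState(present=True, asleep=True, distressed=False)
upset = ViewerState(present=True, asleep=False, distressed=True)
```

A rule that rewards positive reactions to product placement would follow the same pattern, with one more field on the state record.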

What’s Next for User Aware Video?

Bitmovin is a very customer-driven company and our development roadmap is often guided by customer needs. User Aware Video is one of the exceptions. We have built this feature because we can see the huge number of practical applications and optimizations that it enables. The Bitmovin team is excited to see how our customers will put it to use, and what the results will be. If you are interested in finding out more about User Aware Video, contact the sales team for a demonstration.
