What is CMAF?

In February 2016 at the 114th MPEG meeting in San Diego, an input contribution was registered providing a proposal for a “common media format for segmented media” signed by a number of major companies. This document proposed a Media Application Format (MAF) based on the ISO Base Media File Format (ISOBMFF) and other MPEG standards for segmented media delivery which later became the MPEG Common Media Application Format (CMAF; officially MPEG-A Part 19 or ISO/IEC 23000-19).

In this blog post we will take a closer look at CMAF and how it actually relates to existing over-the-top (OTT) deployments.

MAF – Quo Vadis?

Before going into the details of CMAF, a word on MPEG Media Application Formats (MAFs), as I’m not sure everyone is familiar with them even though CMAF is already the 19th MAF that MPEG has specified (the first MAFs were specified approximately ten years ago). According to Part 1 of MPEG-A (ISO/IEC TR 23000-1:2007), MAFs are created “by selecting readily tested and verified tools taken from the MPEG body of standards and combining them to form an MAF. This concept builds on the toolbox approach of existing MPEG standards.” That is, an MAF does not define new technology but selects parts and profiles of existing MPEG standards as appropriate for the envisioned application.

CMAF Scope

Now that we know what an MAF is, we can start to answer the question: what is CMAF? In June 2016, ISO/IEC MPEG issued the committee draft (CD; subject to national body comments) of CMAF with the aim of allowing a single media encoding to be used across many applications and devices. To that end, CMAF defines the encoding and packaging of segmented media objects for delivery and decoding on end user devices in adaptive multimedia presentations. In particular, this covers (i) storage, (ii) identification, and (iii) delivery of encoded media objects with various constraints on encoding and packaging. That is, CMAF defines not only the segment format but also codecs and, most importantly, media profiles (i.e., for AVC, HEVC, AAC).

While this reminds us a lot of MPEG-DASH and the Interoperability Guidelines/Points (IOPs) of the DASH-IF, it’s mainly aligned with Apple’s recent announcement of support for fragmented MP4 (fMP4), which may ultimately lead to a single segment format for over-the-top (OTT) delivery (currently we need to support both MPEG-2 Transport Stream (TS) and ISOBMFF).

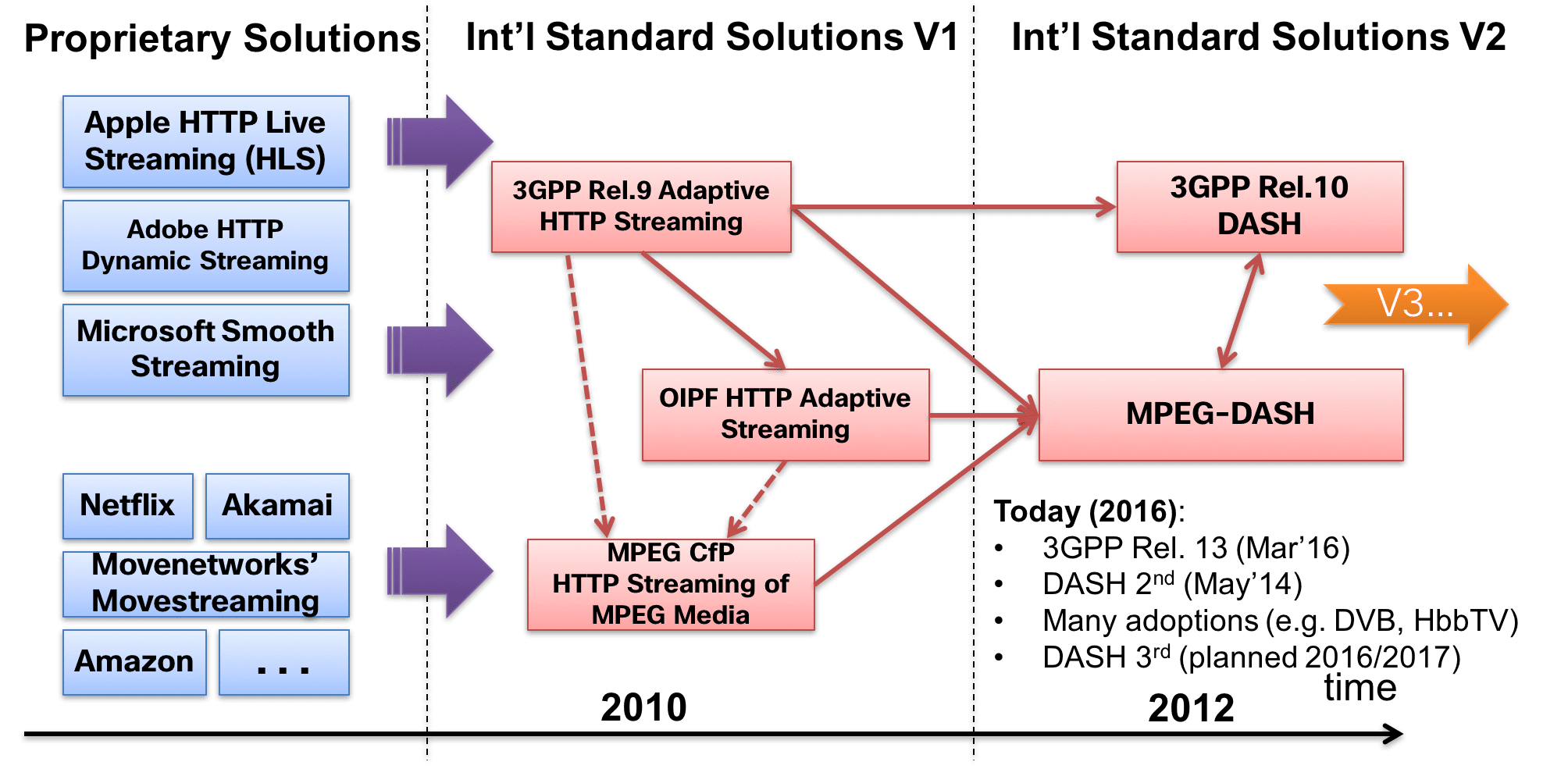

History of MPEG-DASH, HLS, and CMAF

Some time ago I created a history slide of DASH (see slide 6 here), which I’ve updated below to show the progress of MPEG-DASH and how it has evolved over time.

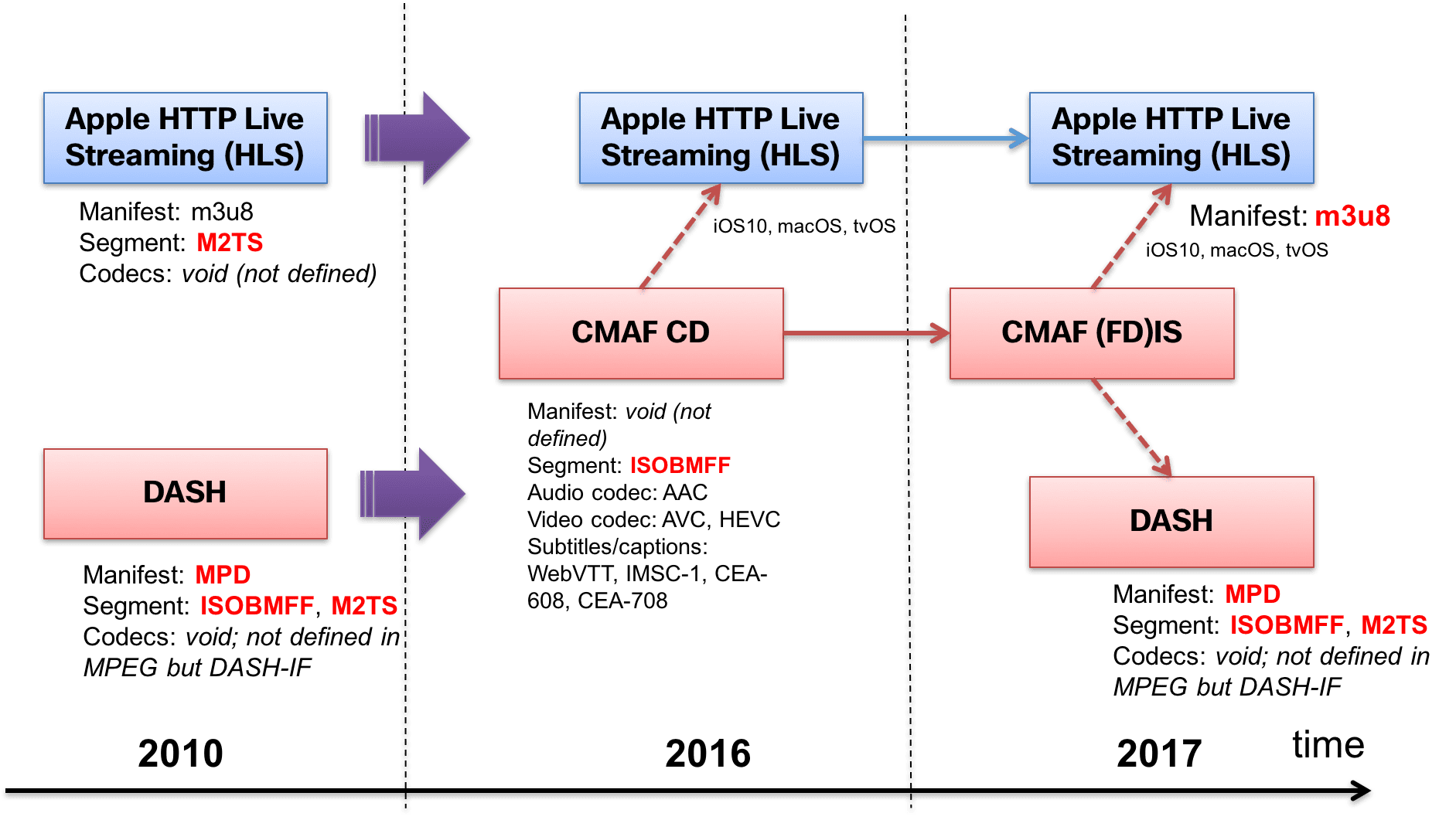

With the emerging CMAF and Apple’s announcement of supporting fMP4 (i.e., basically CMAF), I tried to do the same with DASH, HLS, and CMAF, which is depicted below. CMAF basically defines the segment format based on ISOBMFF and the audio-video codecs as well as subtitles/captions, but not the manifest. It is assumed that any manifest that meets the functional requirements in the form of a media object model can be used. In other words, m3u8 as well as MPD can be used with CMAF “segments” (note: CMAF actually differentiates between fragments, segments, and chunks – see next post for more details).

CMAF is expected to reach final draft international standard (FDIS) and consequently international standard (IS) next year (which corresponds to fast track standardization of MAFs).

Apple HLS with support for fMP4 will be available in iOS 10, macOS, and tvOS, but we have already demonstrated its usage on all major Web browser platforms, requiring little to no change in existing workflows. MPEG-DASH still supports M2TS but only ISOBMFF is used in practice (see also DASH-IF IOPs). Requiring two different manifest formats (m3u8 and MPD) is actually a minor burden compared to having two different segment formats (but the devil is in the detail).

DASH Data Model vs. CMAF Media Object Model

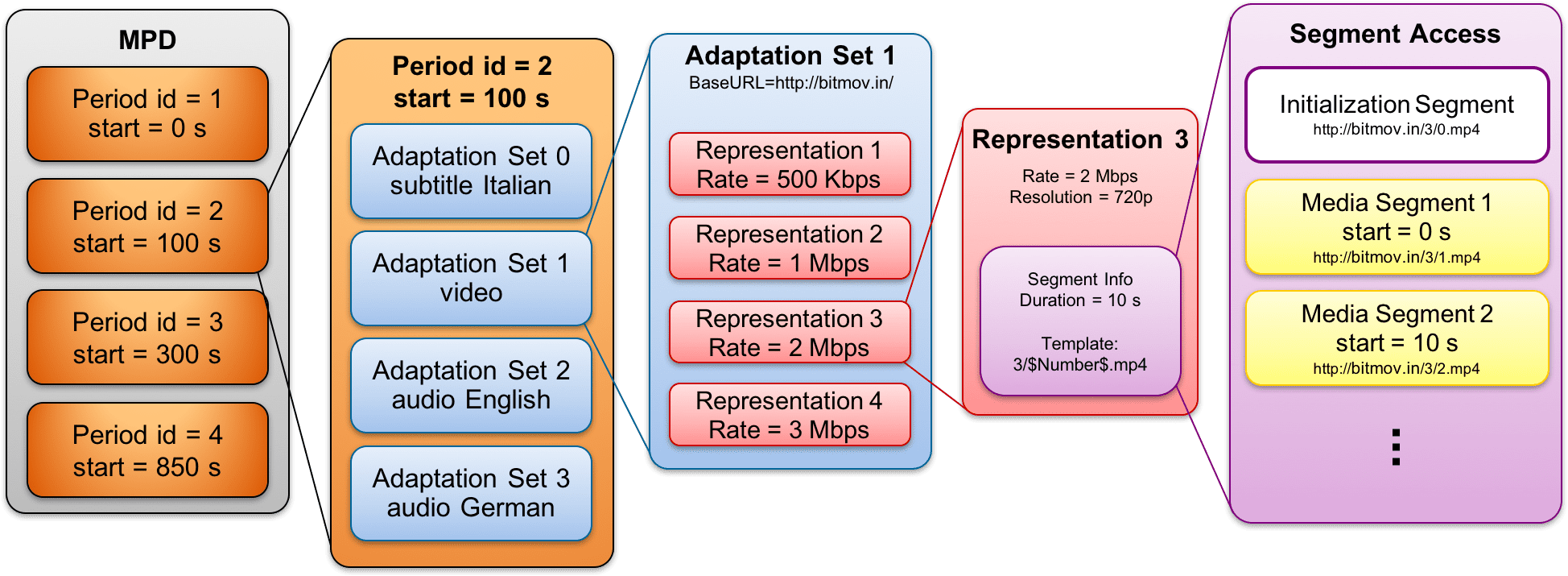

The manifest instantiates the data or media object model. Interestingly, CMAF does not define a manifest but DASH does, in the form of the Media Presentation Description (MPD). We have used slides like the figure below to illustrate the DASH data model of the media presentation for educational purposes.

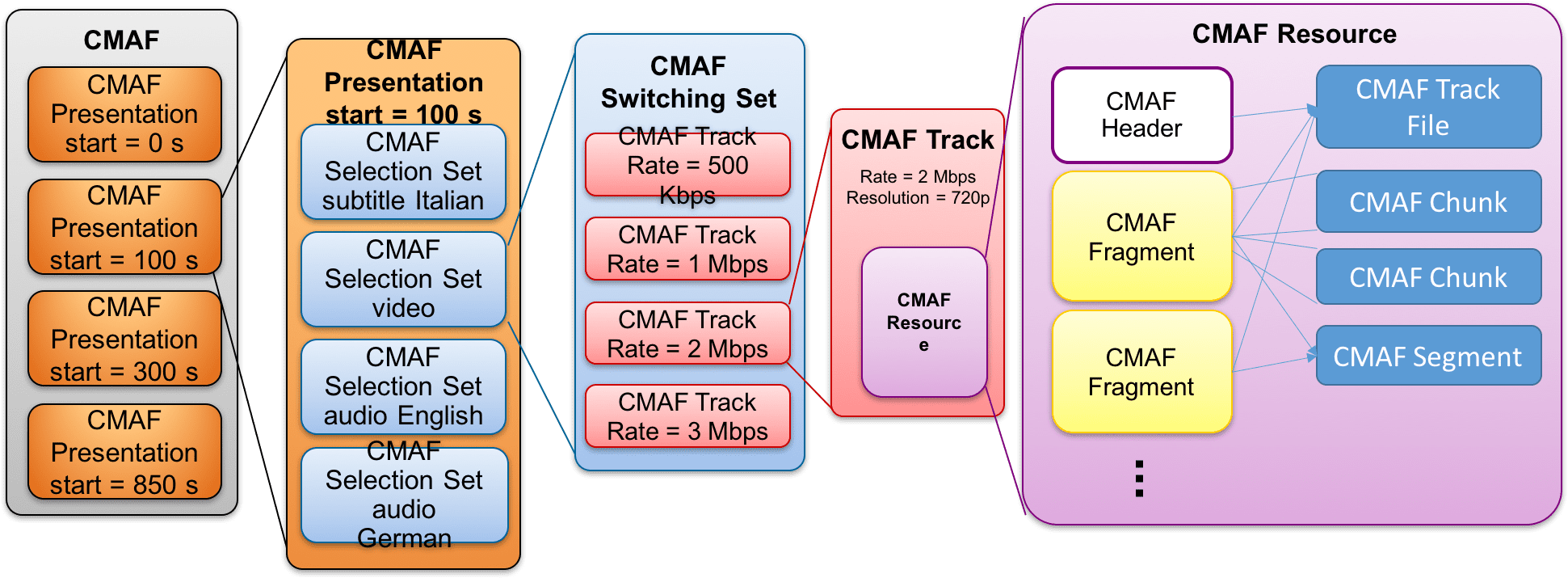

An MPD may contain multiple periods, each period may contain multiple adaptation sets, and each adaptation set may contain multiple representations, which provide references (in the form of HTTP URLs) to the actual segments. Although the figure above does not show all possibilities and use cases, it provides a pretty good overview of how DASH (and its underlying data model) works. As CMAF does not define a manifest, but any manifest can be used to instantiate the CMAF media object model, I thought it should be possible to use the same figure as above but with CMAF terms and definitions (which ultimately can be instantiated using an MPD). The result is shown below although, again, not every possibility and use case is reflected here.
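The nesting described above can be written down as a handful of plain data classes. This is only a minimal sketch of the hierarchy, not an MPD parser: the class and field names (`rep_id`, `bandwidth`, `segment_urls`, and so on) as well as the `example.com` URLs are my own illustrative assumptions and do not mirror the actual MPD schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Representation:
    rep_id: str          # hypothetical identifier
    bandwidth: int       # bits per second
    segment_urls: List[str] = field(default_factory=list)


@dataclass
class AdaptationSet:
    content_type: str    # e.g. "video" or "audio"
    representations: List[Representation] = field(default_factory=list)


@dataclass
class Period:
    start: float         # seconds
    adaptation_sets: List[AdaptationSet] = field(default_factory=list)


@dataclass
class MPD:
    periods: List[Period] = field(default_factory=list)

    def segment_urls(self) -> List[str]:
        """Walk periods, adaptation sets and representations in order
        and collect every referenced segment URL."""
        return [
            url
            for period in self.periods
            for aset in period.adaptation_sets
            for rep in aset.representations
            for url in rep.segment_urls
        ]


# One period with a two-bitrate video adaptation set and one audio track.
mpd = MPD(periods=[Period(start=0.0, adaptation_sets=[
    AdaptationSet("video", [
        Representation("v-low", 500_000, ["https://example.com/v-low/1.m4s"]),
        Representation("v-high", 3_000_000, ["https://example.com/v-high/1.m4s"]),
    ]),
    AdaptationSet("audio", [
        Representation("a-main", 128_000, ["https://example.com/a-main/1.m4s"]),
    ]),
])])

print(len(mpd.segment_urls()))  # prints 3
```

A client would pick one representation per adaptation set and switch between the representations of that set as throughput changes; the sketch only captures the containment relationships.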

Periods in DASH are presentations in CMAF but there is no concept above presentations defined in CMAF. It is assumed to be defined in the actual manifest.

Each CMAF presentation may contain multiple CMAF selection sets for component selection (e.g., codecs, audio/subtitle languages). Each CMAF selection set may contain multiple switchable CMAF tracks and is then referred to as a switching set. The functionality of both the selection set and the switching set of CMAF is implemented as the adaptation set within DASH (where the @group attribute has a specific and also legacy meaning). A CMAF track basically corresponds to a DASH representation, which is switchable (i.e., allowing for dynamic adaptive streaming) within a CMAF switching set or DASH adaptation set, respectively.

DASH initialization segments are referred to as headers in CMAF. CMAF fragments are basically DASH segments (from an abstract point of view) although Annex E of the current CMAF specification provides a different view. CMAF also defines segments and chunks as well as the term CMAF resource and CMAF track (files).
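To keep the two vocabularies apart, the correspondences described so far can be collected in a small lookup table. This is just a summary of the mapping as described in this post, not something taken from either specification; the dictionary and the helper function `cmaf_term` are hypothetical names.

```python
from typing import Optional

# DASH term -> closest CMAF term, following the description in this post.
# None means CMAF defines no counterpart (it is left to the manifest).
DASH_TO_CMAF = {
    "mpd": None,
    "period": "presentation",
    # The DASH adaptation set covers both CMAF selection and switching sets.
    "adaptation set": "selection set / switching set",
    "representation": "track",
    "initialization segment": "header",
    # Only from an abstract point of view, as noted in the text.
    "segment": "fragment",
}


def cmaf_term(dash_term: str) -> Optional[str]:
    """Return the closest CMAF term for a DASH term, or None if CMAF
    has no counterpart. Raises KeyError for unknown DASH terms."""
    return DASH_TO_CMAF[dash_term.strip().lower()]


print(cmaf_term("Representation"))  # prints track
```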

Confused? Yes, indeed!

A CMAF segment may contain multiple CMAF fragments but no CMAF header, and a CMAF fragment is actually an ISOBMFF segment with additional constraints. A CMAF chunk is basically a sequential and contiguous subset (i.e., a set of samples) of a CMAF fragment, enabling low-latency streaming.

So what is a sample?

That’s actually defined in ISOBMFF as “all the data associated with a single timestamp”. A CMAF resource can be identified using an identifier (URI, URL) and a CMAF track file is an ISOBMFF file containing both CMAF header and fragments.
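Since all of these objects are ISOBMFF structures, one way to tell them apart is to look at their top-level boxes. The sketch below walks the boxes of a byte string (each box starts with a 32-bit big-endian size followed by a four-character type) and applies a deliberately simplified rule of thumb: `ftyp` plus `moov` is treated as a header, `moof`/`mdat` pairs as fragment data, and both together as a track file. The classification rule, the labels, and the helper names are my own assumptions for illustration; the rule ignores any optional boxes and none of the additional CMAF constraints are checked.

```python
import struct
from typing import Iterator, Tuple


def iter_boxes(data: bytes) -> Iterator[Tuple[str, int]]:
    """Yield (type, size) for each top-level ISOBMFF box in data."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:  # a 64-bit "largesize" follows the type field
            if offset + 16 > len(data):
                raise ValueError("truncated largesize box header")
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:  # box extends to the end of the data
            size = len(data) - offset
        if size < header or offset + size > len(data):
            raise ValueError("invalid box size at offset %d" % offset)
        yield box_type.decode("ascii", errors="replace"), size
        offset += size


def make_box(box_type: str, payload: bytes = b"") -> bytes:
    """Build a toy box (helper for the example only)."""
    return struct.pack(">I4s", 8 + len(payload), box_type.encode("ascii")) + payload


def classify(data: bytes) -> str:
    """Simplified rule of thumb, not a conformance check."""
    types = [t for t, _ in iter_boxes(data)]
    has_header = types[:2] == ["ftyp", "moov"]
    rest = types[2:] if has_header else types
    pairs_ok = len(rest) % 2 == 0 and all(
        rest[i:i + 2] == ["moof", "mdat"] for i in range(0, len(rest), 2)
    )
    if has_header and not rest:
        return "header"
    if has_header and pairs_ok:
        return "track file"
    if rest and pairs_ok:
        return "fragments"
    return "unknown"


# Toy data: the payloads are placeholders, not valid box contents.
header = make_box("ftyp", b"\x00" * 8) + make_box("moov")
fragment = make_box("moof") + make_box("mdat", b"\x00" * 4)

print(classify(header))             # prints header
print(classify(fragment))           # prints fragments
print(classify(header + fragment))  # prints track file
```

Note that box types alone cannot distinguish a segment made of several fragments from a fragment delivered as several chunks, which is why the sketch only reports "fragments".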

CMAF tracks are independent, i.e., each contains only CMAF fragments of the same media stream (no multiplexed content), and they are strictly aligned (synchronized) with each other to facilitate bitstream switching, i.e., the core functionality of dynamic adaptive streaming. However, this also requires that all CMAF fragments within a switching set start with independently decodable media data, and it imposes a few other constraints (e.g., same height, width and position; same aspect ratio, framing (position and cropping within coded blocks), transfer function, bit depth, color subsampling, color volume, and presentation timing).
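Two of these requirements, aligned fragment boundaries and fragments that start with independently decodable data, are easy to express as a check over fragment metadata. The following is a rough sketch under my own assumptions: the `Fragment` fields and the function `switching_set_problems` are hypothetical, times are integer ticks of a shared timescale, and the remaining constraints (resolution, bit depth, and so on) are not covered.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Fragment:
    start: int                  # presentation time in timescale ticks
    duration: int               # duration in timescale ticks
    starts_independently: bool  # begins with independently decodable data


def switching_set_problems(tracks: Dict[str, List[Fragment]]) -> List[str]:
    """Return human-readable problems; an empty list means the tracks
    pass this (partial) switchability check."""
    problems: List[str] = []
    if not tracks:
        return problems
    # Use the first track as the reference for fragment boundaries.
    ref_name, ref_frags = next(iter(tracks.items()))
    ref_bounds = [(f.start, f.duration) for f in ref_frags]
    for name, frags in tracks.items():
        bounds = [(f.start, f.duration) for f in frags]
        if bounds != ref_bounds:
            problems.append(f"{name}: fragment boundaries differ from {ref_name}")
        for f in frags:
            if not f.starts_independently:
                problems.append(
                    f"{name}: fragment at {f.start} is not independently decodable"
                )
    return problems


tracks = {
    "video-720p": [Fragment(0, 180000, True), Fragment(180000, 180000, True)],
    "video-1080p": [Fragment(0, 180000, True), Fragment(180000, 180000, True)],
}
print(switching_set_problems(tracks))  # prints []
```

If a player can rely on such a check having passed at packaging time, it can switch tracks at any fragment boundary without inspecting the media itself.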

Final Remarks: Obstructions and Caveats

The CMAF media object model can be implemented using the DASH data model within the MPD, but this requires some (editorial) effort, and some terms and definitions of CMAF still need to be better aligned with DASH. In practice, however, it seems that implementations are ahead of the specification. An important aspect is MPEG Common Encryption (CENC), which is also adopted by CMAF, but CMAF allows for both AES-128 CTR (Counter) and AES-128 CBC (Cipher Block Chaining) modes. In terms of the manifest, HLS uses m3u8 while DASH uses the MPD. The MPD is based on XML with probably a richer set of features (e.g., spatial relationship descriptor), it can be extended very easily (e.g., descriptors), and standard XML tools can be used for processing and manipulating MPDs.

Finally, we here at Bitmovin believe that with CMAF, we look into a bright OTT future with a single segment format (based on ISOBMFF) reducing deployment overhead and complexity while providing excellent Quality of Experience (QoE) for end users. But QoE is another story… stay tuned!
