Part III – The Epic Battle of CPU Architectures: AMD vs ARM vs Intel

Selecting a CPU architecture is one of the most critical decisions in running a successful Software or Platform as a Service (SaaS or PaaS) organization, as it is the foundation on which the service runs. At the time of this post, there are two “standard” x86 vendors, Intel and AMD, and one up-and-coming alternative architecture, ARM. This blog is the third and final part of Bitmovin’s Intern Series: The Journey from x86 to ARM. As Bitmovin strives for innovation, we’re constantly adopting newer and more efficient technologies. To take our cloud-based encoding solution to the next level, we decided to test a new CPU architecture and compare our current x86 architecture against ARM.

The first blog post of this series covered the basics of CPU architectures, why we selected ARM, and the complexities of moving library dependencies from x86 to ARM. The second post covered the second phase of implementation, where we defined how to apply Docker tags efficiently to the new ARM-based architecture. This final post outlines the last step of our journey from x86 to ARM, where I measured the efficiency and cost differences between the newly adopted ARM architecture and x86 to determine what improvements our tedious project achieved. To further validate our decision, this review also includes a comparison of the two x86 vendors, Intel and AMD.

Experimental Setup

Encoding Setup

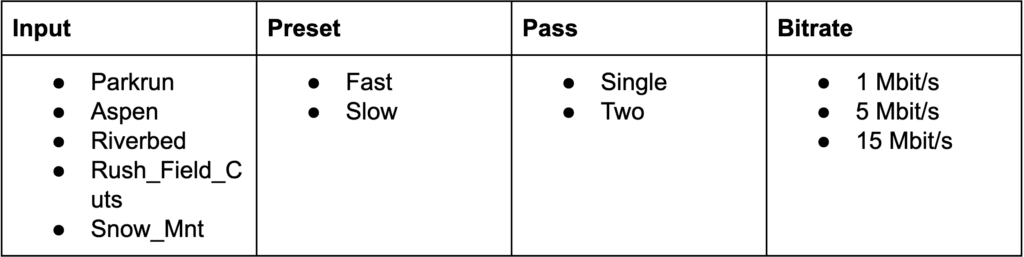

To measure the performance of each architecture (AMD vs ARM vs Intel), a set of x264 and x265 encodings was executed. The encodings were run serially and covered every combination of the characteristics and parameters shown in the figure above.

The input files were obtained from https://media.xiph.org/video/derf/. Combining all of these options resulted in 60 encodings. The metric for the cost evaluation was the total combined execution time of all encodings on the target architecture.
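As a rough illustration of how such a test matrix can be enumerated, here is a minimal Python sketch. The parameter names and values are placeholders chosen for this example, not the actual test matrix, so the number of jobs it produces does not match the 60 encodings of the real run.

```python
from itertools import product

# Hypothetical parameter values for illustration only; the real test
# matrix used different options and produced 60 encodings.
PARAMETERS = {
    "codec": ["x264", "x265"],
    "input": ["parkrun", "other_clip"],
    "preset": ["slow", "medium"],
    "passes": ["single", "multi"],
    "bitrate_mbps": [5, 10],
}


def enumerate_encodings(parameters):
    """Return one dict per combination of the given parameter values."""
    names = list(parameters)
    return [dict(zip(names, values)) for values in product(*parameters.values())]


jobs = enumerate_encodings(PARAMETERS)
print(len(jobs))  # 2 * 2 * 2 * 2 * 2 = 32 combinations
```

Each entry in `jobs` describes one encoding, which keeps the list easy to feed into a script that runs the encodings one after another.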

An example x264 command for Parkrun with preset=slow, pass=single, and bitrate=5 Mbit/s (x264 expects the bitrate in kbit/s, hence 5000) is as follows:

x264 --preset slow --bitrate 5000 -o parkrun.h264 parkrun.y4m
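The command above can also be assembled and timed from a script. The following Python sketch is one possible way to do that; the helper names `build_x264_command` and `run_serially` are made up for this example and are not taken from our evaluation scripts.

```python
import shlex
import subprocess
import time


def build_x264_command(input_path, output_path, preset, bitrate_kbps):
    """Build an x264 argument list; --bitrate is given in kbit/s."""
    return [
        "x264",
        "--preset", preset,
        "--bitrate", str(bitrate_kbps),
        "-o", output_path,
        input_path,
    ]


def run_serially(commands, runner=subprocess.run):
    """Run the commands one after another and return total wall-clock seconds."""
    start = time.perf_counter()
    for command in commands:
        runner(command, check=True)  # raises if an encoding fails
    return time.perf_counter() - start


command = build_x264_command("parkrun.y4m", "parkrun.h264", "slow", 5000)
print(shlex.join(command))
```

Calling `run_serially([command])` on a machine with x264 installed would execute the encoding and return the elapsed time, which is the kind of total execution time used as the cost metric here.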

Cloud Setup of the AMD vs ARM vs Intel CPU Processors

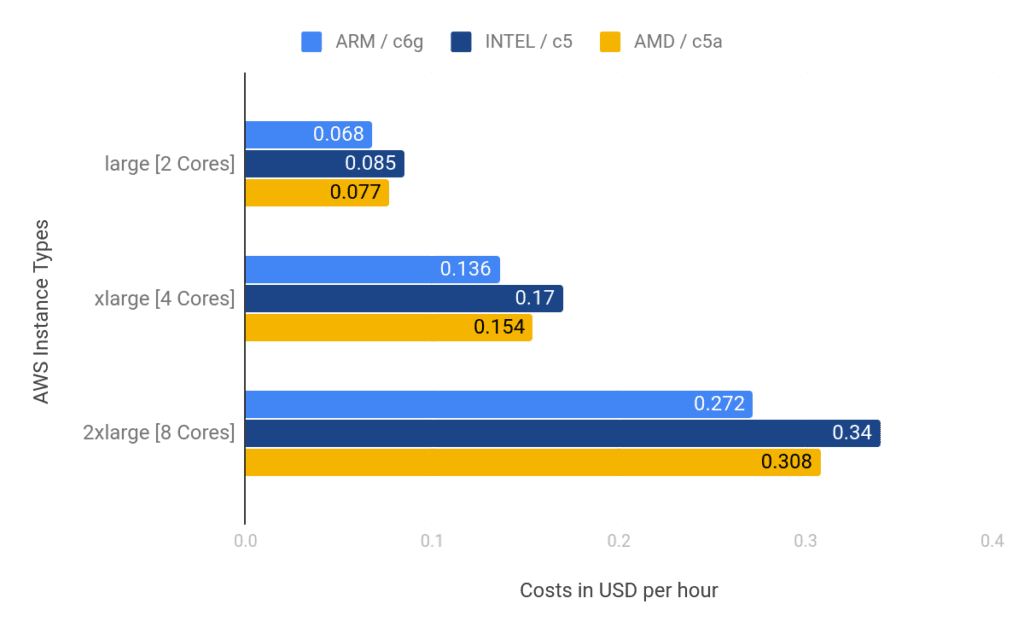

The tests ran in the cloud on AWS, mainly on the so-called “2xlarge” instance type, which provides 8 vCPUs. This is the most used type by industry at the time of this post. The figure above shows the hourly cost in USD for each core count (2, 4, and 8).

We found that the Intel instances were the most expensive at every instance size, followed by AMD and then ARM.

During testing, we measured the total encoding time, the real-time factor, and a quality metric for every test. The hourly instance costs were also included in the comparison.
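To make these metrics concrete, here is a small Python sketch of how they can be derived from a measured encoding time. It assumes the real-time factor is defined as encoding time divided by video duration (some tools report the inverse), and the numbers in the example are invented, not measurements from our tests.

```python
def real_time_factor(encoding_seconds, video_seconds):
    """Encoding time divided by video duration.

    Assumed definition: values below 1.0 mean faster than real time.
    """
    return encoding_seconds / video_seconds


def encoding_cost_usd(encoding_seconds, hourly_price_usd):
    """Cost of occupying an instance for the duration of the encoding."""
    return encoding_seconds / 3600 * hourly_price_usd


# Invented example values, not results from the tests.
print(real_time_factor(encoding_seconds=30, video_seconds=10))
print(encoding_cost_usd(encoding_seconds=7200, hourly_price_usd=0.50))
```

Multiplying the total encoding time by the hourly price is what allows a slower but cheaper instance to be compared fairly against a faster but more expensive one.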

Results of the AMD vs ARM vs Intel test

The tests were run over the course of several days, and the results were averaged before drawing conclusions. Below you’ll find the cost and PSNR-based quality assessments that compare the three CPU architectures against each other:

- C5.2xlarge = Intel

- C6g.2xlarge = ARM

- C5a.2xlarge = AMD (x86)

The full list of instance types is available in the Amazon EC2 documentation.

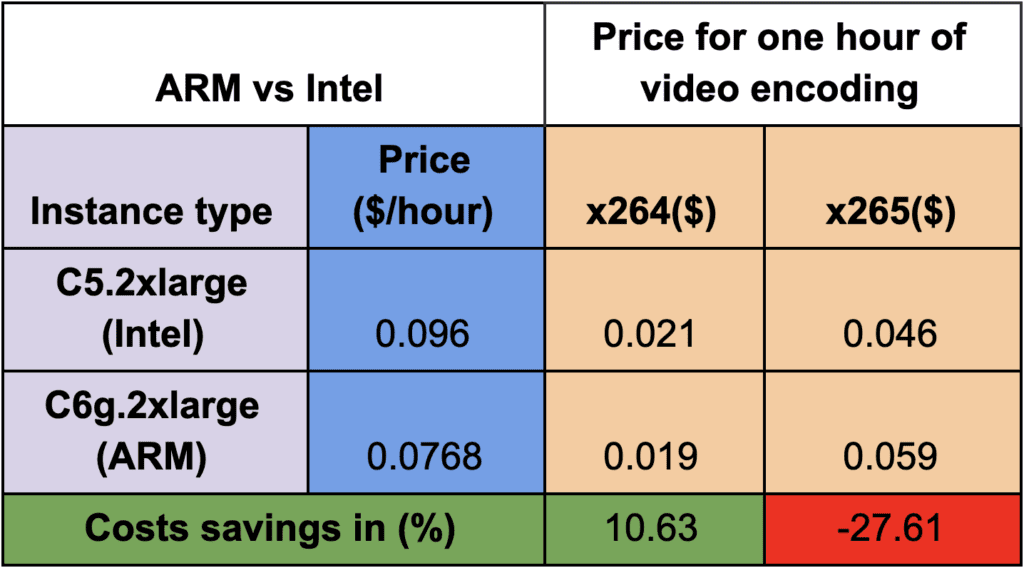

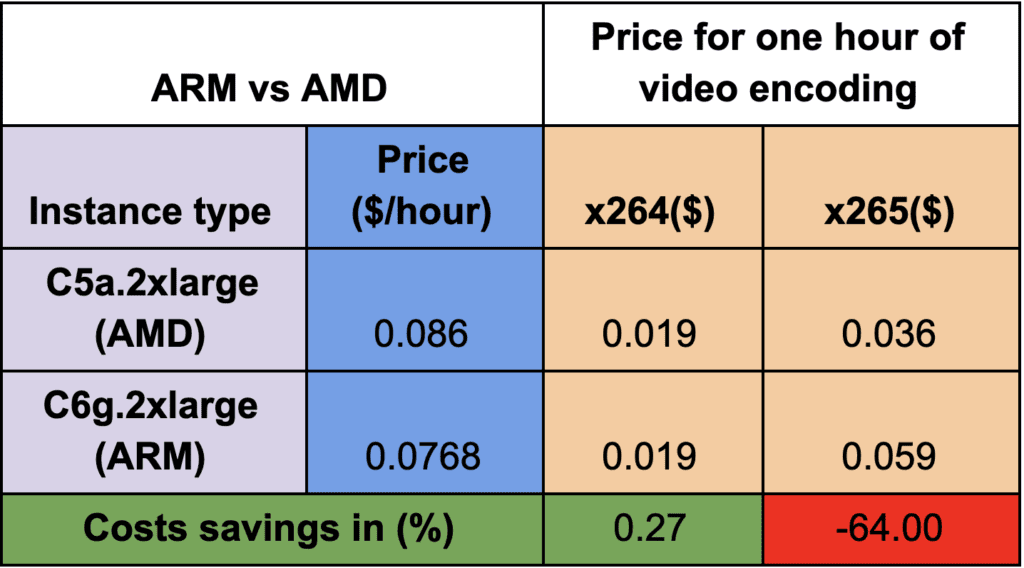

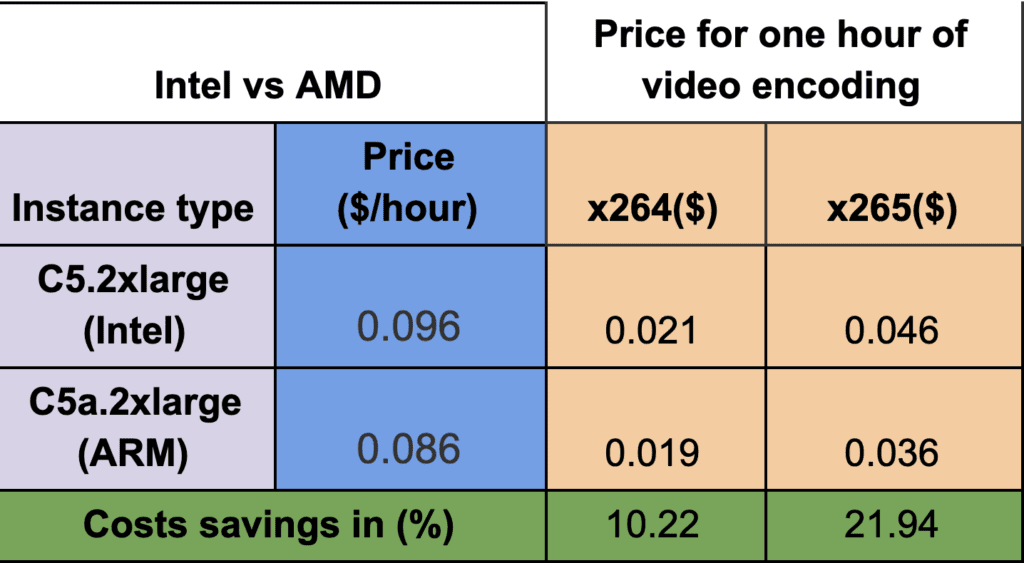

Costs

Conclusion

ARM vs x86

x264

ARM is somewhat slower than x86 for x264, but because the instances are considerably cheaper, it can still pay off. The results show that ARM can be 10% cheaper than Intel, whereas compared with AMD the costs are nearly the same.
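The trade-off between speed and price can be expressed as a simple break-even calculation. The Python sketch below uses made-up ratios purely to illustrate the reasoning; they are not the measured values from our tests.

```python
def relative_cost(time_ratio, price_ratio):
    """Cost of the candidate relative to the baseline.

    time_ratio:  candidate encoding time / baseline encoding time
    price_ratio: candidate hourly price / baseline hourly price
    A result below 1.0 means the candidate is cheaper overall.
    """
    return time_ratio * price_ratio


def break_even_slowdown(price_ratio):
    """Largest time_ratio at which the candidate still costs no more."""
    return 1 / price_ratio


# Invented example: candidate is 10% slower but 20% cheaper per hour.
print(round(relative_cost(time_ratio=1.10, price_ratio=0.80), 2))
print(round(break_even_slowdown(price_ratio=0.80), 2))
```

In this invented case the candidate would end up about 12% cheaper per encoding, and it could take up to 1.25 times as long as the baseline before the price advantage is used up.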

x265

According to the average test results, ARM is not yet competitive for x265. Even though the instances are cheaper, they cannot compete with the x86 instances because the encoder is not yet optimized for ARM. Once the codecs are updated it could pay off to switch, but this might take a while. When that happens, the test can easily be repeated, since the entire evaluation process was automated by scripts and the source code is provided.

Intel vs AMD

Based on the comparison between Intel and AMD, we can conclude that it will pay off to switch to AMD, since those instances are both cheaper and better performing in the test. By switching to AMD, we could save approximately 10% and 20% in costs for H.264 and H.265 encodings respectively.

Quality

During the evaluation, we measured PSNR for the various test cases and found no quality difference between the instance types.
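For readers unfamiliar with the metric, PSNR compares the decoded output against the original on a logarithmic scale. The Python sketch below shows the textbook formula on flat lists of 8-bit sample values; it is only an illustration and does not reflect how the measurements in this post were actually taken (for example per frame or per plane).

```python
import math


def psnr(reference, encoded, max_value=255):
    """Peak signal-to-noise ratio in dB between two equally sized sample lists."""
    if not reference or len(reference) != len(encoded):
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error between corresponding samples.
    mse = sum((r - e) ** 2 for r, e in zip(reference, encoded)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10 * math.log10(max_value ** 2 / mse)


# Tiny invented sample lists; real inputs would be the pixels of a frame.
print(psnr([16, 128, 235, 64], [16, 128, 235, 64]))
print(psnr([16, 128, 235, 64], [17, 127, 236, 63]))
```

Because every instance type runs the same encoder with the same settings, matching PSNR values across instances are what one would expect, and a mismatch would point to a build or configuration difference.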

Future Work

Moving forward, we will begin experimenting with larger and more diverse assets, as our first set of tests only used 2-5 short assets (mostly 10-20 s long, plus one of 30 min). Next, we’ll need to track codec updates so that we can further optimize for ARM as encoder support improves. Lastly, we’ll keep checking whether AWS introduces newer ARM instance types that perform better or yield even greater cost savings.

To summarize, ARM has a bright future, but for now it depends mostly on software support and on whether the industry decides to seize the opportunity this technology offers.

For the rest of the series, see:

- Part I – Updating Library Dependencies from x86 to ARM

- Part II – Applying Docker Tags to ARM